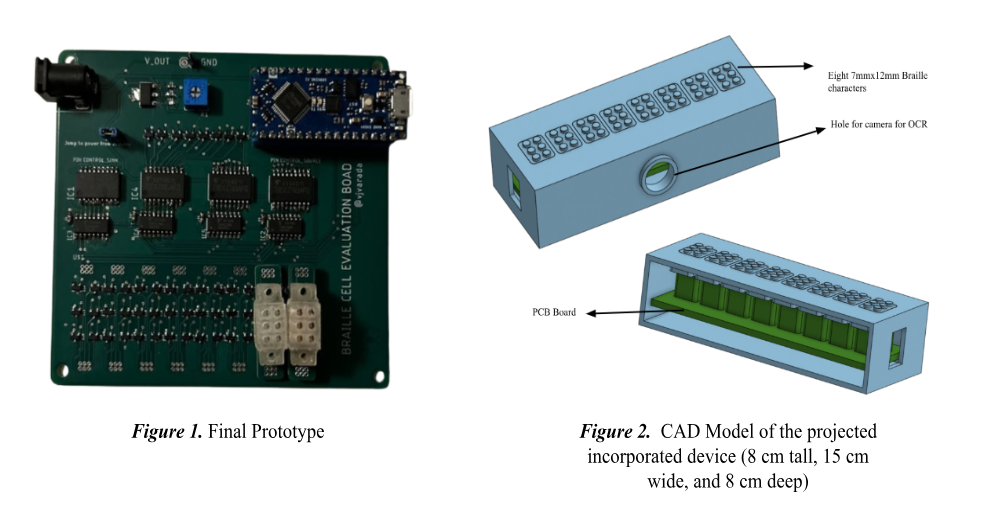

The final design prototype is an affordable electromechanical refreshable braille display module that uses micro magnets and Optical Character Recognition (OCR) technology. It consists of a Raspberry Pi as the main computing unit, a main PCB that houses all the braille cells, and a separate PCB for each braille cell.

For text-to-braille conversion, the Raspberry Pi captures camera images and performs OCR on them using the Tesseract library from Python. After converting the images into text, the Raspberry Pi uses the pybraille library to convert the text into Unicode braille, which is displayed on a live web server. For the braille cells and PCB configuration, we printed the braille cells on an SLA 3D printer, scaling up the CAD file to attain a more precise resolution. In the electromechanical component, solenoids generate an electromagnetic field that rotates a cam, which raises the braille pin. The main PCB is controlled by an Arduino Nano, which sends instructions to each smaller PCB holding a braille cell.

In the future, the two components will be combined to automate the process and connect the OCR directly to the device. Our design studies (see the design study document) show that the aspects of the braille display developed so far function as intended. Further improvement requires a more precise 3D printer that can print the small braille cells at the correct resolution. Figure 2 shows what the final, fully integrated device will look like, combining the optical and braille display components. This is a more complex device that our group will work towards as the project continues.
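As an illustration of how the Unicode braille output could eventually drive the pins, the sketch below maps each six-dot Unicode braille character to a list of raised and lowered pin states. It relies only on the standard Unicode encoding, in which a braille pattern is the code point U+2800 plus one bit per dot. The function names `braille_char_to_pins` and `braille_text_to_frames` are hypothetical and are not part of the prototype's code; how the resulting states would be sent to the Arduino Nano is left out, since that interface is not described here.

```python
from typing import List

BRAILLE_BASE = 0x2800  # first code point of the Unicode Braille Patterns block


def braille_char_to_pins(ch: str) -> List[bool]:
    """Return the state of dots 1-6 (True = raised) for one braille character.

    Hypothetical helper: assumes a six-pin cell, so eight-dot patterns
    (which use dots 7 and 8) are rejected.
    """
    offset = ord(ch) - BRAILLE_BASE
    if not 0 <= offset <= 0x3F:
        raise ValueError(f"{ch!r} is not a six-dot Unicode braille pattern")
    # Bit 0 encodes dot 1, bit 1 encodes dot 2, ..., bit 5 encodes dot 6.
    return [bool(offset & (1 << i)) for i in range(6)]


def braille_text_to_frames(text: str) -> List[List[bool]]:
    """Convert a string of braille characters into one pin frame per cell."""
    return [braille_char_to_pins(ch) for ch in text]


if __name__ == "__main__":
    # U+2813 is the braille letter "h" (dots 1, 2 and 5).
    for frame in braille_text_to_frames("\u2813\u2801"):
        raised = [i + 1 for i, up in enumerate(frame) if up]
        print("raised dots:", raised)
```

Each frame lists the dots in the conventional order, with dots 1 to 3 running down the left column and dots 4 to 6 down the right, so a frame could be forwarded to one cell's PCB once the two subsystems are connected.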