
STEM

In STEM, we work on an independent research project to present at February Fair. At the start of the year, we brainstormed project ideas and practiced reading scientific literature. Once we had chosen our project ideas, we completed a required background reading of at least 20 articles. With that background reading done, we were ready to start our research or engineering projects. Twice per term, we also have update meetings with Dr. C, both informal and formal, where we discuss or present our progress. Additionally, we wrote a grant proposal based on our project and some preliminary data. We presented preliminary data at December Fair and final data at February Fair.

Parameter-Efficient Fine-Tuning of Large Language Models for Math Education

My project is about using artificial intelligence for educational purposes. Specifically, it investigates the difficulties large language models have in producing correct answers to math problems. I am tackling grade-level math word problems because students often encounter them, for example on standardized tests. I also trained a model using parameter-efficient fine-tuning (PEFT), a family of techniques that reduce the resources needed for training by updating only a small number of parameters while keeping most of the pretrained model frozen. This is important for making AI more accessible to the average person and for saving resources while still maintaining effectiveness. One application of this work would be giving students access to an AI tutor that can help them study.
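To make the savings concrete, the sketch below counts parameters for a BERT-base-sized encoder (12 layers, hidden size 768, feed-forward size 3072, 512 positions) with a one-score-per-option multiple-choice head, and for LoRA adapters, which are one common PEFT method. The project does not say which PEFT method or settings it used, so LoRA with rank 8 on the attention query and value matrices, and the bert-base-cased vocabulary size of 28,996, are assumptions for illustration only. Under these assumptions the trainable share comes out near 0.27%, the same order as the 0.2721% reported in the Analysis, but that does not establish the project's actual configuration.

```python
# Hypothetical parameter count for a BERT-base-sized encoder with LoRA adapters.
# Assumptions (not stated in the project): LoRA rank 8 on the query and value
# projections of every layer, and the bert-base-cased vocabulary size.

HIDDEN = 768          # hidden size of BERT-base
FFN = 3072            # feed-forward (intermediate) size
LAYERS = 12           # number of transformer layers
MAX_POSITIONS = 512   # maximum sequence length
TYPE_VOCAB = 2        # segment (token type) embeddings
VOCAB = 28996         # bert-base-cased vocabulary size (assumption)


def linear(n_in: int, n_out: int) -> int:
    """Weights plus biases of a dense layer."""
    return n_in * n_out + n_out


def layer_norm(dim: int) -> int:
    """Scale and shift vectors of a LayerNorm."""
    return 2 * dim


def bert_base_params(vocab: int = VOCAB) -> int:
    """Embeddings + 12 transformer layers + pooler of a BERT-base encoder."""
    embeddings = (vocab + MAX_POSITIONS + TYPE_VOCAB) * HIDDEN + layer_norm(HIDDEN)
    attention = 4 * linear(HIDDEN, HIDDEN) + layer_norm(HIDDEN)  # Q, K, V, output
    feed_forward = linear(HIDDEN, FFN) + linear(FFN, HIDDEN) + layer_norm(HIDDEN)
    pooler = linear(HIDDEN, HIDDEN)
    return embeddings + LAYERS * (attention + feed_forward) + pooler


def lora_params(rank: int, targets_per_layer: int = 2) -> int:
    """LoRA adds A (rank x d_in) and B (d_out x rank) to each adapted matrix."""
    return LAYERS * targets_per_layer * rank * (HIDDEN + HIDDEN)


if __name__ == "__main__":
    head = linear(HIDDEN, 1)              # multiple-choice head: one score per option
    base_with_head = bert_base_params() + head
    adapters = lora_params(rank=8)
    trainable = adapters + head           # frozen encoder, trainable adapters and head
    total = base_with_head + adapters
    print(f"base model:  {base_with_head:,} parameters")
    print(f"trainable:   {trainable:,} parameters")
    print(f"trainable %: {100 * trainable / total:.4f}")
```

The frozen encoder dominates the count (about 108 million parameters), while the adapters add fewer than 300,000, which is why the trainable fraction is so small.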

Abstract

Artificial intelligence is widely used in professional and educational domains but still has many shortcomings. This paper addresses the limitations of large language models in solving grade-school math problems. Prior studies have shown that students are receptive to using AI, but they have also identified specific failures of AI, such as simple calculation mistakes that lower overall accuracy, or confusing and verbose solutions that prevent students from using AI to its full potential.

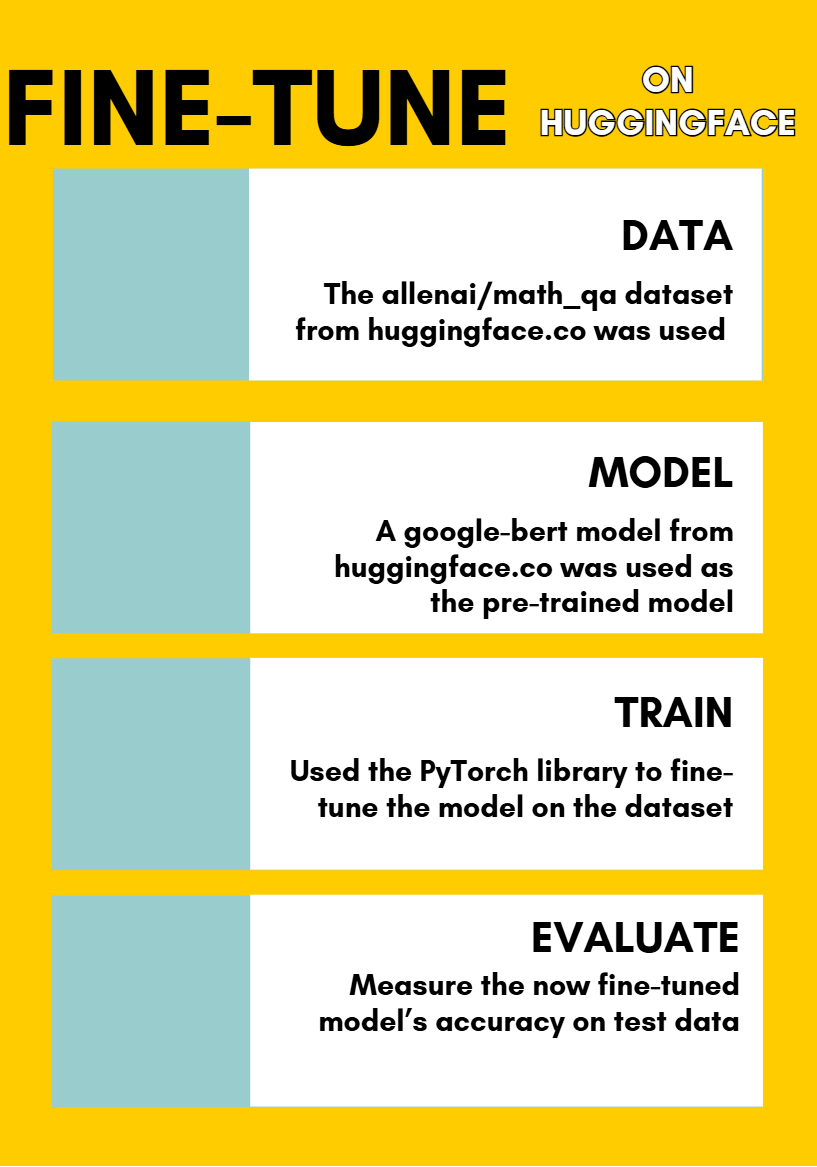

In the initial stages of this work, Hugging Face (huggingface.co) was used because it is a leading source of free AI models and its Python library was found to be very user-friendly. An open-source solution was preferred to keep costs down, and simple, small datasets were chosen. The results showed that the model was capable of learning to solve problems specific to the dataset. However, this worked only because the dataset was not diverse: the model overfitted to the data. In the next phase, a larger, more diverse dataset was used, which increased computational costs beyond what is feasible on most personal computers.

To offset this, parameter-efficient fine-tuning methods were employed, and the model was hosted in the cloud so that hardware would not limit testing. The results showed that parameter-efficient fine-tuning greatly reduced the computation required, although the model's accuracy on this diverse dataset remained limited.

The application of this work is that such a model could be useful in the educational sphere as a tool to help students study math in an ever-changing educational world.

Problem Statement

Large language models have poor accuracy in solving math problems

Engineering Goal

Fine-tune large language models to improve their accuracy while reducing the computation required

Background

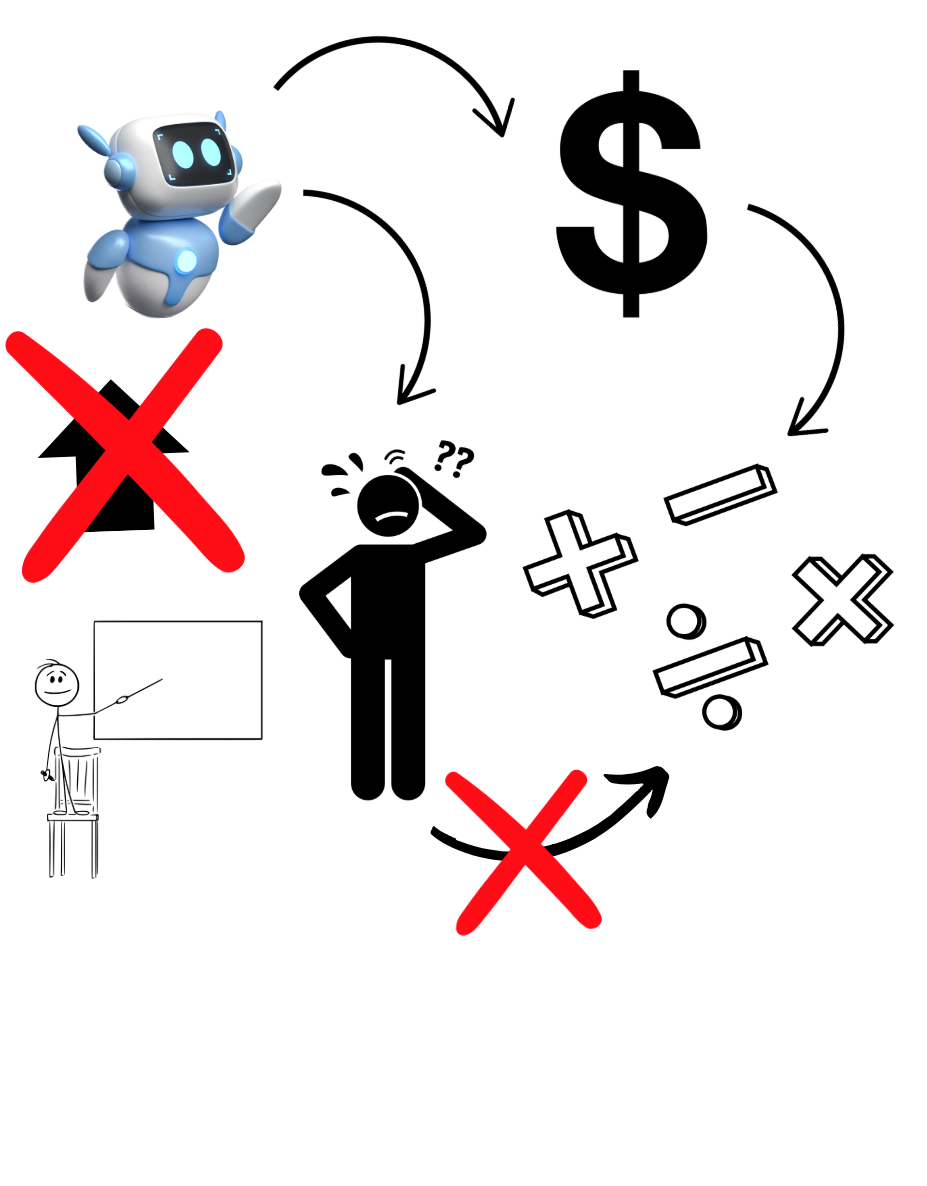

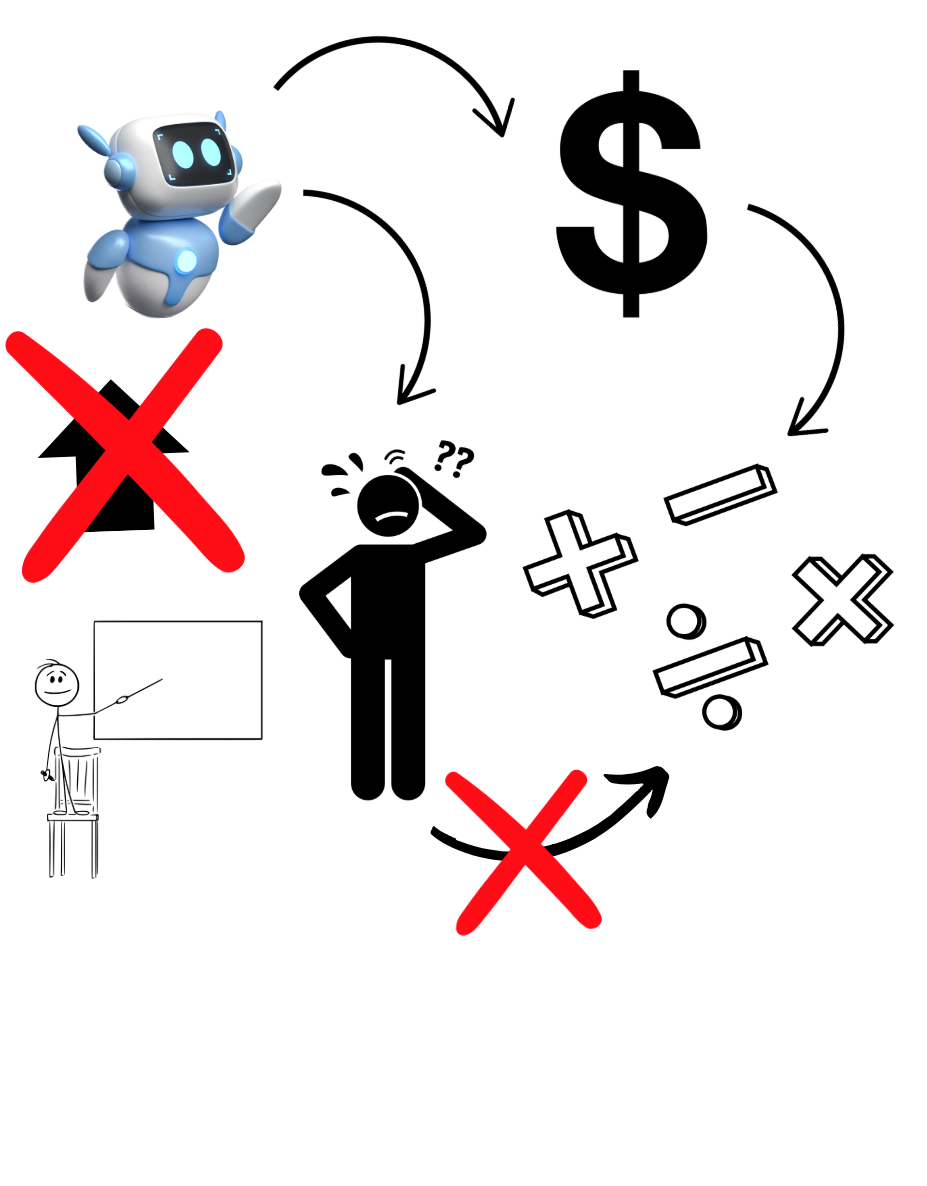

Educational professionals are interested in harnessing the capabilities of artificial intelligence. However, many barriers prevent them from using AI to its full potential. For example, these tools are inaccessible or difficult to use for many people (Xie et al., 2021). Moreover, the shortcomings of language models in domains such as mathematics make them insufficient tools for educational purposes (Dao & Le, 2023).

Competitors in the space of AI for math education are rare. The premier product for this purpose is Khanmigo, Khan Academy’s AI tutoring assistant, which uses GPT-4, an extremely powerful model owned by OpenAI (Ofgang, 2024). While it is helpful for students, it is not perfect. For instance, Khanmigo is not free: in most cases, users must pay a subscription to access it. While this might be expected, it reflects the computational cost of such an accurate model. Khan Academy is a nonprofit organization that makes all of its other resources available for free, so the fact that even it must charge for Khanmigo shows how costly it is to run state-of-the-art large language models for math education.

The most important gap preventing AI from being used in the educational sphere is its lack of accuracy. For example, in a study of ChatGPT’s ability to solve high school math problems from Vietnam’s national graduation exam, Dao and Le (2023) found that it had lower accuracy on higher-level questions. Easier questions in the “Knowledge” and “Comprehension” categories were answered with accuracies of 75-90% and 40-72%, respectively, depending on the year, but accuracy on the hardest types of questions fell as low as 0% in some years (Dao & Le, 2023). Clearly, even high-quality large language models such as ChatGPT struggle in this domain. Fine-tuning such a model on math problems could allow it to achieve much higher accuracy on math problem datasets.

Procedure

This project required only a personal computer, from which all resources were accessed. The laptop used was a Yoga Pro 9i with an NVIDIA GeForce RTX 4050 graphics card.

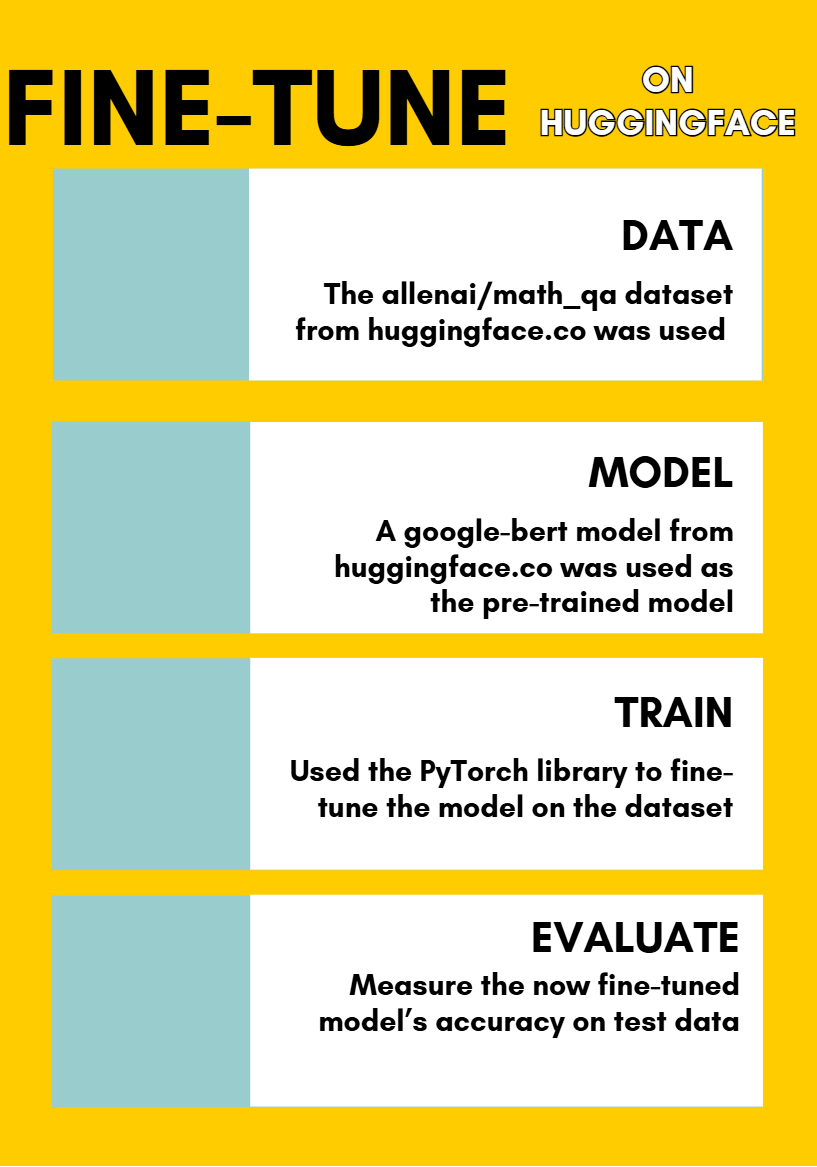

The selected model was obtained from the Hugging Face Hub, which hosts models from many leading AI developers, such as OpenAI and Google. For this project, a Google BERT (Bidirectional Encoder Representations from Transformers) model was used. The model was trained with the Hugging Face Transformers library, which lets users easily fine-tune pretrained models using PyTorch or TensorFlow, two machine learning libraries for Python (Hugging Face, n.d.). Transformers supports both total fine-tuning and parameter-efficient fine-tuning methods. Additionally, the Hugging Face Evaluate library was used to evaluate both models.
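Multiple-choice accuracy is usually computed by choosing the highest-scoring option for each question and comparing it with the correct label. In the Transformers multiple-choice tutorial, the argmax is taken in a `compute_metrics` function and the Evaluate `accuracy` metric performs the comparison. The plain-Python sketch below reproduces that logic without either library; the function names and scores are made up for illustration and are not part of Evaluate.

```python
def predict(option_scores: list[list[float]]) -> list[int]:
    """Pick the index of the highest-scoring option for each question (argmax)."""
    return [max(range(len(scores)), key=scores.__getitem__) for scores in option_scores]


def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of questions whose predicted option matches the correct one."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


scores = [
    [0.1, 2.0, 0.3, 0.0, -1.0],  # model prefers option b (index 1)
    [1.5, 0.2, 0.1, 0.0, 0.0],   # model prefers option a (index 0)
]
preds = predict(scores)
print(preds, accuracy(preds, [1, 2]))  # prints "[1, 0] 0.5"
```

With 1000 test questions, an accuracy of 29.9% corresponds to 299 questions where the top-scoring option was the correct one.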


The dataset allenai/math_qa was used (Ai2, 2024). It contains multiple-choice math problems, and each entry includes a problem, an explanation (rationale), the answer options, and the correct answer. The Hugging Face multiple-choice tutorial was used as rough guidance and adapted to the domain of math problems (Multiple choice, 2025). The code loads the dataset, preprocesses it with a Google BERT tokenizer, and then trains the Google BERT model on that data.
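Adapting the multiple-choice tutorial mainly means turning each MathQA row into five (problem, option) pairs plus an integer label. The sketch below assumes the raw row format shown on the Hugging Face Hub, with the fields `Problem`, `options` (a single string such as `a ) 38 , b ) 27.675 , ...`) and `correct` (a letter); these names and the string layout should be checked against the dataset card before relying on them. Tokenization is left out: in the tutorial, the flattened pairs are passed to the tokenizer and then regrouped into one set of choices per question.

```python
import re

# Matches the "a ) ", ", b ) ", ... markers that separate options in MathQA's
# raw `options` string (format assumed from the Hugging Face dataset viewer).
OPTION_MARKER = re.compile(r"(?:^|,\s*)([a-e])\s*\)\s*")
LETTERS = "abcde"


def parse_options(options: str) -> list[str]:
    """Split 'a ) 38 , b ) 27.675 , ...' into ['38', '27.675', ...]."""
    # With a capturing group, re.split returns ['', 'a', '38 ', 'b', '27.675 ', ...].
    pieces = OPTION_MARKER.split(options.strip())
    letters, texts = pieces[1::2], pieces[2::2]
    if "".join(letters) != LETTERS:
        raise ValueError(f"unexpected option layout: {options!r}")
    return [text.strip() for text in texts]


def to_choice_pairs(row: dict) -> tuple[list[str], list[str], int]:
    """Pair the problem with each option and convert the answer letter to an index."""
    choices = parse_options(row["options"])
    first = [row["Problem"]] * len(choices)  # the question, repeated once per option
    label = LETTERS.index(row["correct"].strip())
    return first, choices, label


row = {
    "Problem": "what is 2 + 3 ?",
    "options": "a ) 4 , b ) 5 , c ) 6 , d ) 7 , e ) none of these",
    "correct": "b",
}
first, choices, label = to_choice_pairs(row)
```

Raising an error on an unexpected layout, rather than silently producing fewer than five choices, keeps malformed rows from misaligning the labels.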

Results

Analysis

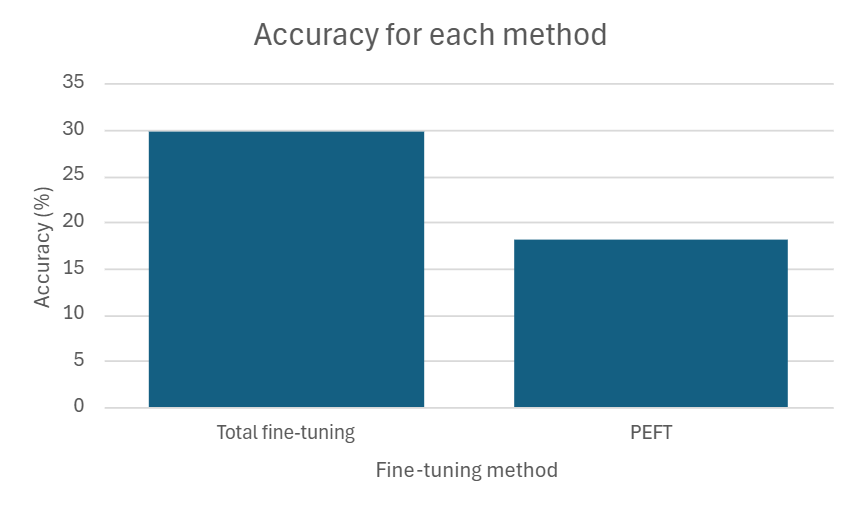

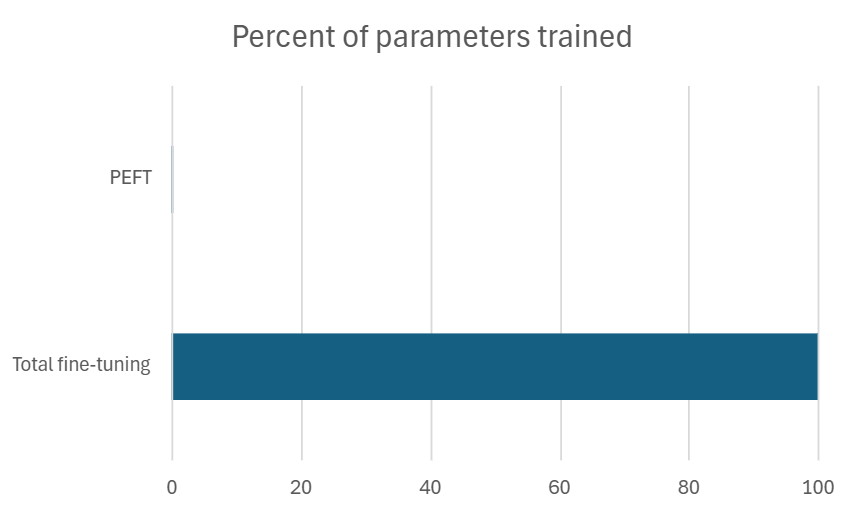

Total fine-tuning resulted in an accuracy of 29.9% on the test data, while parameter-efficient fine-tuning (PEFT) resulted in an accuracy of 18.1%. However, the PEFT model trained only 0.2721% of the model's total parameters, so its computational requirements were much lower. Accuracy was determined by testing each model on 1000 test questions from the dataset. A two-proportion z-test gave a z-score of 6.17 and a p-value of 3.25 × 10^-10, meaning that there is convincing evidence that the PEFT model performs worse than the total fine-tuned model.
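The reported statistics can be checked with the standard pooled two-proportion z-test. The counts 299/1000 and 181/1000 are back-calculated from the reported accuracies and the 1000 test questions, and the one-sided alternative (total fine-tuning is more accurate) is inferred from the wording of the conclusion; both are assumptions. With these inputs the sketch gives z ≈ 6.18 (6.17 when truncated) and a one-sided p-value on the order of 10^-10, consistent with the reported values.

```python
import math


def two_proportion_z_test(correct_a: int, n_a: int,
                          correct_b: int, n_b: int) -> tuple[float, float]:
    """One-sided test of H1: p_a > p_b, using the pooled standard error."""
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)      # shared rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))         # upper-tail normal probability
    return z, p_value


# Total fine-tuning: 299/1000 correct; PEFT: 181/1000 correct (back-calculated).
z, p = two_proportion_z_test(299, 1000, 181, 1000)
print(f"z = {z:.2f}, one-sided p = {p:.2e}")
```

A two-sided test would double the p-value, which would not change the conclusion.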

Conclusion

The goal of saving on computational costs was achieved: the PEFT model trained far fewer parameters than the total fine-tuned model. It could also train with a batch size of 32 on the personal device, while the total fine-tuned model could only train with a batch size of 8. However, the accuracy of both models was lackluster. A future step would be to incorporate chain-of-thought reasoning to improve the model's performance. This could not be implemented due to time constraints, but it has shown promise in past research. Chain-of-thought is when a model is guided to produce intermediate steps before arriving at an answer. In this case, a model could be prompted to output its reasoning, and the multiple-choice model could then be trained on that reasoning.
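The proposed chain-of-thought extension can be sketched as a two-stage pipeline: a generator model writes reasoning for each problem, and that reasoning is appended to the problem text before it is paired with the answer options for the multiple-choice model. Everything here is hypothetical: `generate_reasoning` stands in for whatever text-generation model would be used, and the prompt wording and the `Reasoning:` separator are illustrative choices, not part of the project.

```python
from typing import Callable

# Hypothetical prompt wording asking the generator for intermediate steps.
PROMPT_TEMPLATE = "Solve the problem step by step.\nProblem: {problem}\nSteps:"


def build_prompt(problem: str) -> str:
    """Stage 1 input: a prompt that asks for reasoning before the answer."""
    return PROMPT_TEMPLATE.format(problem=problem)


def with_reasoning(problem: str, generate_reasoning: Callable[[str], str]) -> str:
    """Run the (hypothetical) generator and append its reasoning to the problem."""
    reasoning = generate_reasoning(build_prompt(problem)).strip()
    return f"{problem} Reasoning: {reasoning}"


def choice_pairs(problem: str, options: list[str],
                 generate_reasoning: Callable[[str], str]) -> list[tuple[str, str]]:
    """Stage 2 input: the reasoning-augmented problem paired with each option."""
    context = with_reasoning(problem, generate_reasoning)
    return [(context, option) for option in options]


def fake_generator(prompt: str) -> str:
    """Stand-in for a real generator so the sketch runs without any model."""
    return "2 + 3 = 5, so the answer is 5."


pairs = choice_pairs("what is 2 + 3 ?", ["4", "5", "6", "7", "none of these"], fake_generator)
```

Keeping the generator as a plain function argument means the same pipeline could be tried with different reasoning sources without changing how the multiple-choice inputs are built.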

References

Ai2. (2024, August 13). math_qa. Hugging Face. https://huggingface.co/datasets/allenai/math_qa

Dao, X.-Q., & Le, N.-B. (2023, October 10). Investigating the Effectiveness of ChatGPT in Mathematical Reasoning and Problem Solving: Evidence from the Vietnamese National High School Graduation Examination. arXiv. https://arxiv.org/pdf/2306.06331

Hugging Face. (n.d.). 🤗 Transformers. Hugging Face. https://huggingface.co/docs/transformers/index

Multiple choice. (2025). Hugging Face. https://huggingface.co/docs/transformers/tasks/multiple_choice

Ofgang, E. (2024, April 17). What is Khanmigo? The GPT-4 Learning Tool explained by Sal Khan. Tech & Learning. https://www.techlearning.com/news/what-is-khanmigo-the-gpt-4-learning-tool-explained-by-sal-khan

Xie, H., Hwang, G. J., & Wong, T. L. (2021). Editorial Note: From Conventional AI to Modern AI in Education: Re-examining AI and Analytic Techniques for Teaching and Learning. Educational Technology & Society, 24(3), 85-88. https://doi.org/10.30191/ETS.202107_24(3).0006