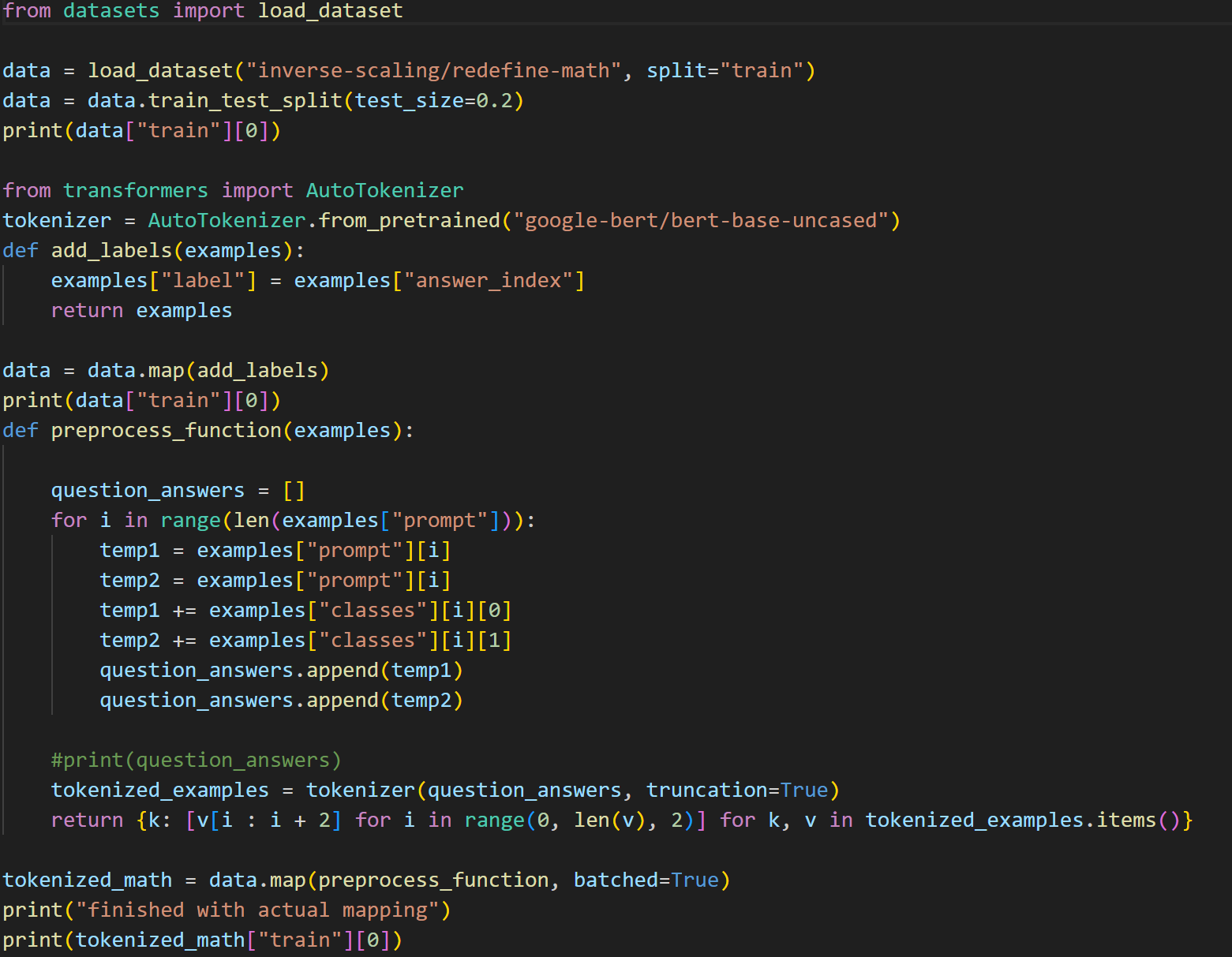

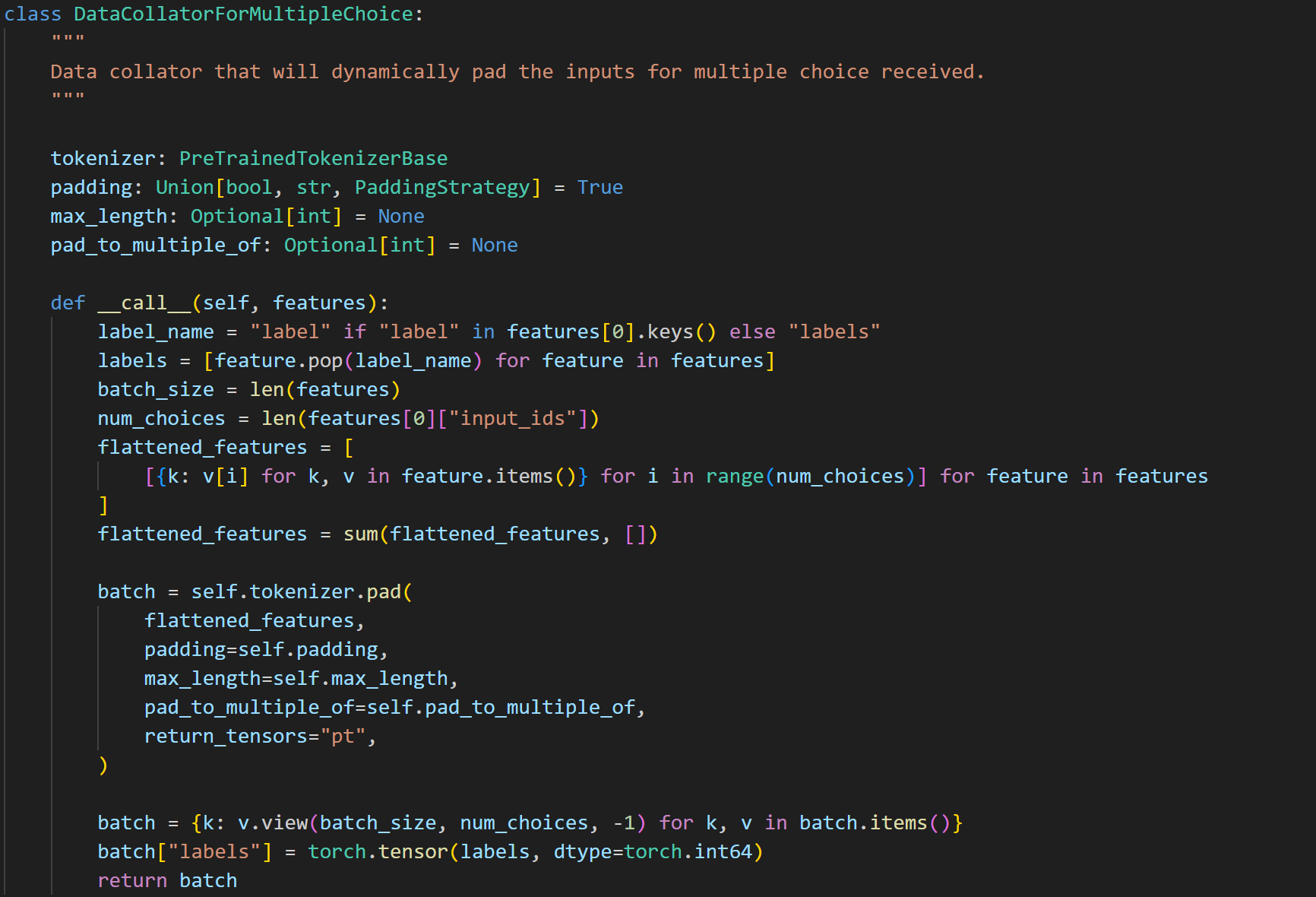

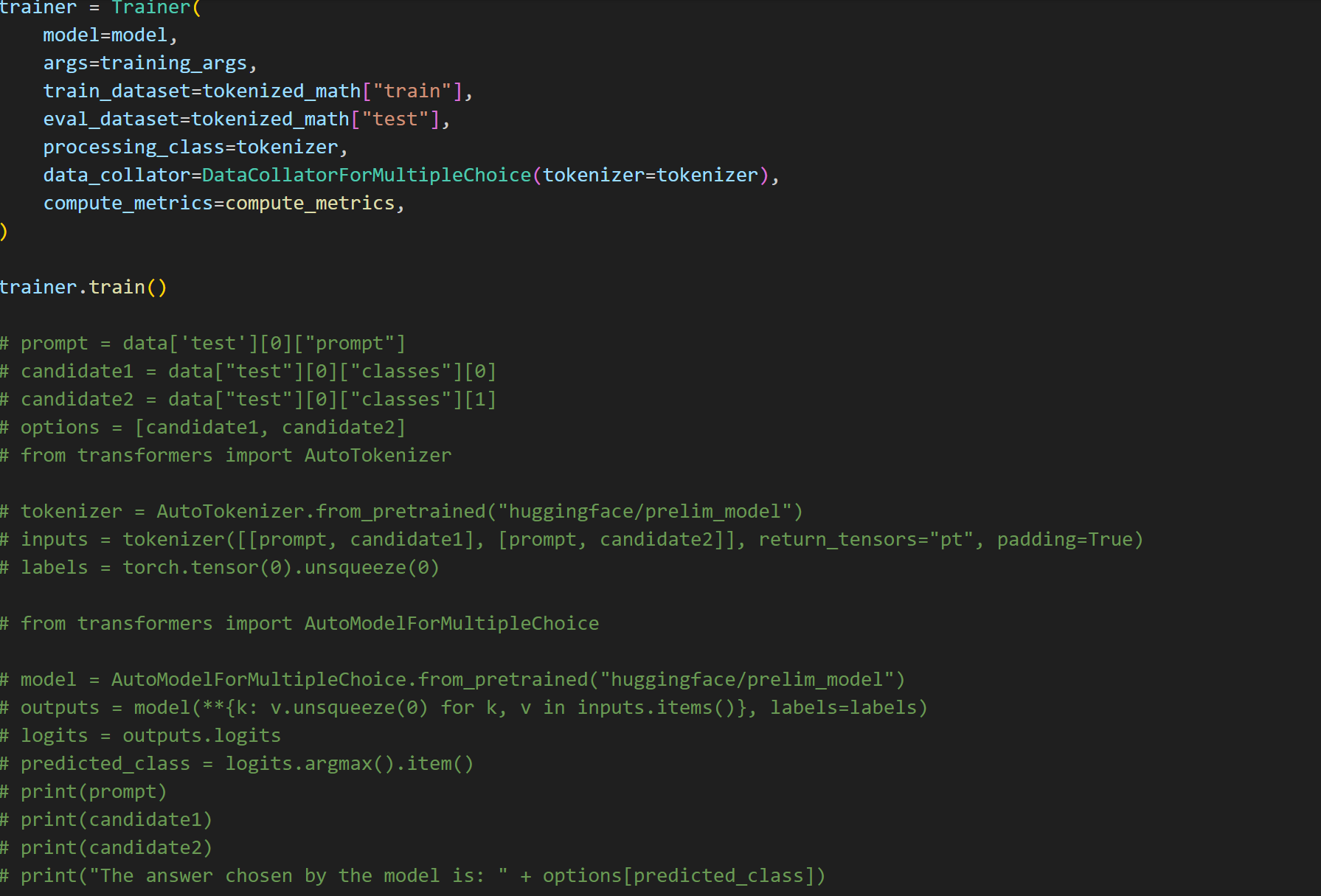

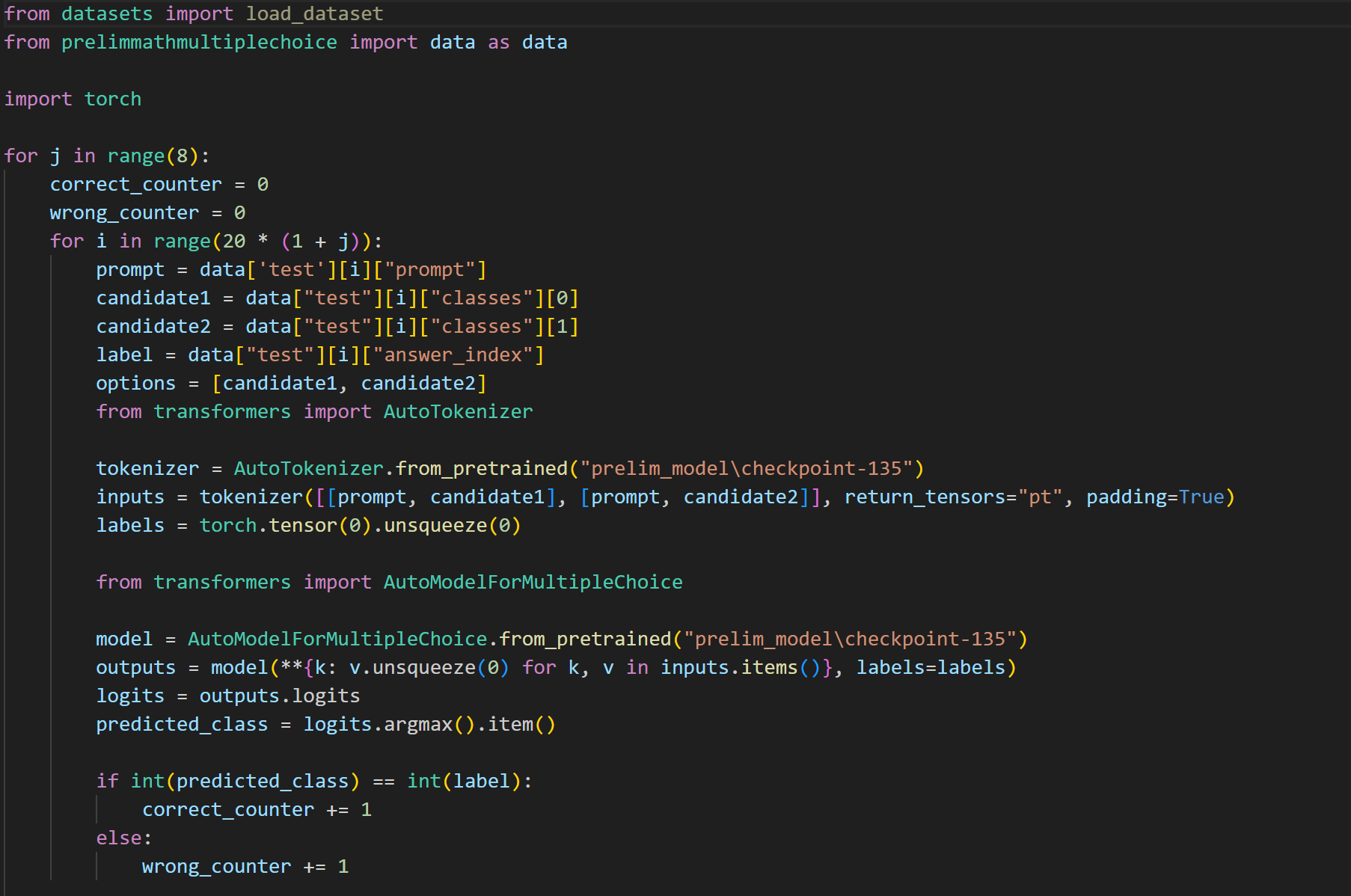

My project involved fine-tuning a large language model to improve its performance on math problems while keeping the cost of training low. Large language models have historically struggled in this domain: they often give incorrect answers or overly verbose reasoning. Fine-tuning a model on math-specific data can increase its accuracy on these problems. However, these models are very large, so it is often difficult for the average person to work with them on a local device. That is why I employed parameter-efficient fine-tuning, in which a model is fine-tuned without adjusting all of its original parameters. Because these models have billions of parameters, this matters a great deal. I chose to fine-tune a model on a dataset of multiple-choice math problems at the grade-school level. Past research has paid little attention to multiple-choice problems, and this work has applications in tutoring and helping students. Many standardized tests use a multiple-choice format, so students would be interested in a multiple-choice-based product to help them prepare. Finally, using parameter-efficient fine-tuning saves computation and shows that training these models does not have to be very taxing.
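To make the savings from parameter-efficient fine-tuning concrete, here is a minimal sketch of the arithmetic for one common method, low-rank adaptation (LoRA). This is an assumption for illustration: the text above does not say which parameter-efficient method was used, and the layer size (4096 x 4096) and rank (8) are hypothetical values, not figures from the project. LoRA freezes the original weight matrix and trains two small matrices whose product has the same shape.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when the whole d_out x d_in weight matrix is trained."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters trained by a rank-`rank` LoRA adapter on the same matrix.

    The adapter is two matrices, B (d_out x rank) and A (rank x d_in);
    the original weights stay frozen.
    """
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Share of the layer's parameters that the adapter actually trains."""
    return lora_params(d_out, d_in, rank) / full_params(d_out, d_in)


if __name__ == "__main__":
    # Hypothetical layer size and rank, chosen only for illustration.
    d, r = 4096, 8
    print(full_params(d, d))            # 16777216
    print(lora_params(d, d, r))         # 65536
    print(trainable_fraction(d, d, r))  # 0.00390625
```

Under these assumed numbers, the adapter trains about 0.4% of the layer's parameters, which is why this family of methods makes it feasible to fine-tune large models on modest hardware.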