MVP

MediSign is a mobile app that aims to give medical professionals
streamlined access to American Sign Language (ASL) signs, making it
easier to communicate with deaf or hard-of-hearing patients during
appointments. The app does this through three main features: it
presents the user with a list of common questions, symptoms, and
responses that might come up during check-ins; it retrieves the
corresponding ASL signs from a database once the user selects an
option; and it displays those signs as a sequence of videos. The app
supports Android and iOS, and it is built with React Native and Expo
for the user interface and Firebase for database management.

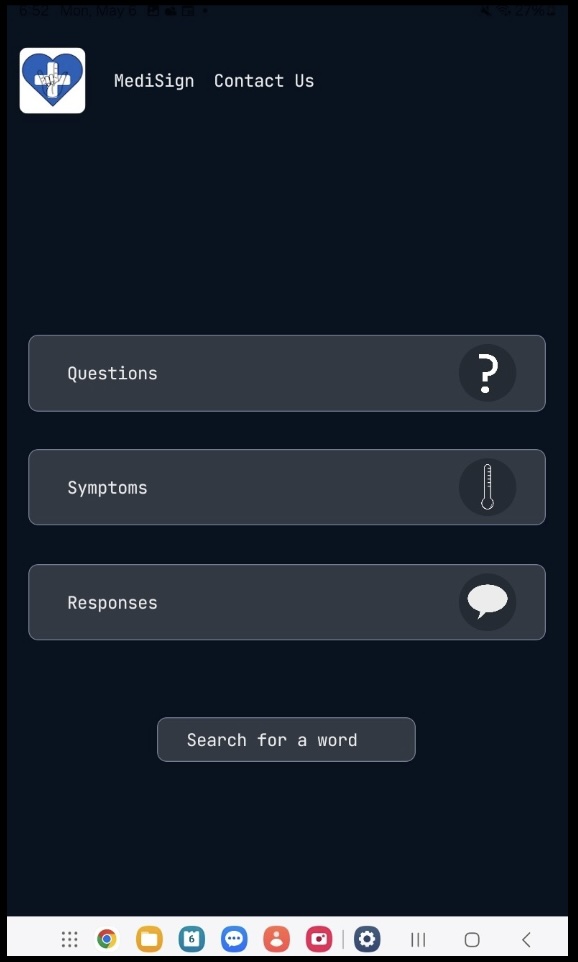

First, the user is given common questions, symptoms, and responses
to choose from. The questions and symptoms were sourced online from
Horizon Healthcare, Nova Southeastern University, and the Royal
College of Obstetricians and Gynecologists (Horizon Healthcare, n.d.;
Nova Southeastern University, n.d.; Royal College of Obstetricians
and Gynecologists, n.d.). The sample responses are "yes", "no", and
"maybe", which let the user understand the patient's answers. On
opening the app, the user sees a menu of these three categories
(questions, symptoms, and responses); selecting a category opens the
list of items in that category. The menu is laid out and styled with
React Native, and navigation between screens is handled by React
Navigation.
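The launch menu and its hand-off to the category list can be sketched in TypeScript as below. The category keys, labels, and screen names (`Menu`, `ItemList`, `Sign`) are hypothetical. `RootStackParamList` follows the screen-to-params map that React Navigation documents for typing a stack navigator, and the `navigate` argument is a plain stand-in for `navigation.navigate` so the sketch runs without the library.

```typescript
// Hypothetical category keys for the three menu options.
type Category = "questions" | "symptoms" | "responses";

interface MenuOption {
  category: Category;
  label: string;
}

// The launch menu: one button per category.
const MENU_OPTIONS: readonly MenuOption[] = [
  { category: "questions", label: "Questions" },
  { category: "symptoms", label: "Symptoms" },
  { category: "responses", label: "Responses" },
];

// Screen name -> route params, the pattern React Navigation uses for typed
// stack navigators. Screen names and params here are hypothetical.
type RootStackParamList = {
  Menu: undefined;
  ItemList: { category: Category };
  Sign: { videoId?: string; imageUrl?: string };
};

// Stand-in for navigation.navigate so the sketch runs without React Navigation.
type Navigate = <S extends keyof RootStackParamList>(
  screen: S,
  params: RootStackParamList[S],
) => void;

// Press handler for a menu button: open the list screen for that category.
function onMenuPress(option: MenuOption, navigate: Navigate): void {
  navigate("ItemList", { category: option.category });
}
```

In an actual screen, each menu button's `onPress` would call a handler like this, with React Navigation performing the transition to the list screen.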

Once the user selects a category, the list for that category is read
from Firebase. Cloud Firestore was chosen for persistent storage to
make the data easier to organize and scale and to keep the code
efficient. In Firestore, the data is split into three collections,
one per category. Each collection is a set of documents, each holding
one of the collected questions, symptoms, or responses along with
either the videoId of its ASL sequence or a reference image. After
the list of questions, symptoms, or responses is retrieved with the
Firebase Modular SDK, React Native displays it as an array of
buttons, from which the user picks one to see its ASL sequence.
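The step from Firestore documents to on-screen buttons might look like the TypeScript sketch below. Only `videoId` comes from the design described here; the field names `text` and `imageUrl` are hypothetical. `DocLike` is a minimal stand-in exposing the two members the code needs from a Firestore query document (an `id` and a `data()` method), so the sketch runs without Firebase. It treats a document as usable only if it has text and at least one of the two media fields.

```typescript
// Minimal stand-in for a Firestore query document: an id plus data().
interface DocLike {
  id: string;
  data(): Record<string, unknown>;
}

// Hypothetical item schema: `text` and `imageUrl` are assumed field names.
interface SignItem {
  id: string;
  text: string;
  videoId?: string;
  imageUrl?: string;
}

// What one button in the list needs to render and react to a press.
interface ButtonModel {
  key: string;
  title: string;
  item: SignItem;
}

// Validate one document; returns null if it lacks text or any media field.
function toSignItem(doc: DocLike): SignItem | null {
  const fields = doc.data();
  const text = fields["text"];
  if (typeof text !== "string" || text.trim() === "") return null;

  const item: SignItem = { id: doc.id, text };
  const videoId = fields["videoId"];
  const imageUrl = fields["imageUrl"];
  if (typeof videoId === "string" && videoId !== "") item.videoId = videoId;
  if (typeof imageUrl === "string" && imageUrl !== "") item.imageUrl = imageUrl;

  // Require at least one of the two media fields.
  return item.videoId !== undefined || item.imageUrl !== undefined ? item : null;
}

// Turn a collection's documents into button models, skipping invalid ones.
function toButtons(docs: readonly DocLike[]): ButtonModel[] {
  return docs
    .map((doc) => toSignItem(doc))
    .filter((item): item is SignItem => item !== null)
    .map((item) => ({ key: item.id, title: item.text, item }));
}
```

With the modular SDK, the input would typically be the `docs` array of the snapshot returned by `getDocs(collection(db, "questions"))`, where `db` is the app's Firestore instance and the collection name is assumed. Keeping the conversion separate from the read lets it be tested without a network connection.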

After a question, symptom, or response has been chosen and retrieved
from Firebase, MediSign displays its ASL sequence to show the user
how to sign what they want to say. The ASL sequences are
public-domain videos sourced from YouTube. Each YouTube video has an
ID in its URL that can be used to embed it in React Native. These IDs
are stored in the videoId field of each Firestore document, and the
react-native-youtube-iframe package uses the videoId value to display
the video. While the video plays, a reference image is displayed
below it for the general care provider to show the patient if
communication breaks down. The reference images are also sourced
from the public domain, and each corresponds to the selected
question, symptom, or response.
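Because the video ID lives in the YouTube URL, a small helper could pull it out of a pasted link when populating the videoId field. The `extractVideoId` function below is a hypothetical sketch. It assumes the 11-character ID format YouTube currently uses, and it recognizes only the `watch?v=`, `youtu.be/`, `/embed/`, and `/shorts/` URL forms (or a bare ID).

```typescript
// YouTube video IDs are (currently) 11 characters: letters, digits, '-' and '_'.
const BARE_ID = /^[A-Za-z0-9_-]{11}$/;

// URL forms this sketch recognizes; each captures the ID in group 1.
const URL_PATTERNS: readonly RegExp[] = [
  /[?&]v=([A-Za-z0-9_-]{11})(?:[&#]|$)/, // youtube.com/watch?v=ID
  /youtu\.be\/([A-Za-z0-9_-]{11})(?:[?&#\/]|$)/, // youtu.be/ID
  /\/(?:embed|shorts)\/([A-Za-z0-9_-]{11})(?:[?&#\/]|$)/, // /embed/ID, /shorts/ID
];

// Returns the video ID from a URL or bare ID, or null if none is found.
function extractVideoId(input: string): string | null {
  const trimmed = input.trim();
  if (BARE_ID.test(trimmed)) return trimmed;
  for (const pattern of URL_PATTERNS) {
    const match = trimmed.match(pattern);
    if (match) return match[1];
  }
  return null;
}
```

The returned string is the value that would be stored in a document's videoId field and later handed to the react-native-youtube-iframe player.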