Computer Science is taught by Mrs. Taricco. At the beginning of the year, we learned some HTML (HyperText Markup Language) and CSS (Cascading Style Sheets) to build these websites.

Currently we are learning Java, an object-oriented programming language. Topics we have covered this year include AWT graphics, boolean logic, iteration, static arrays, and ArrayLists.

We also take ACSL (American Computer Science League) contests throughout the year.

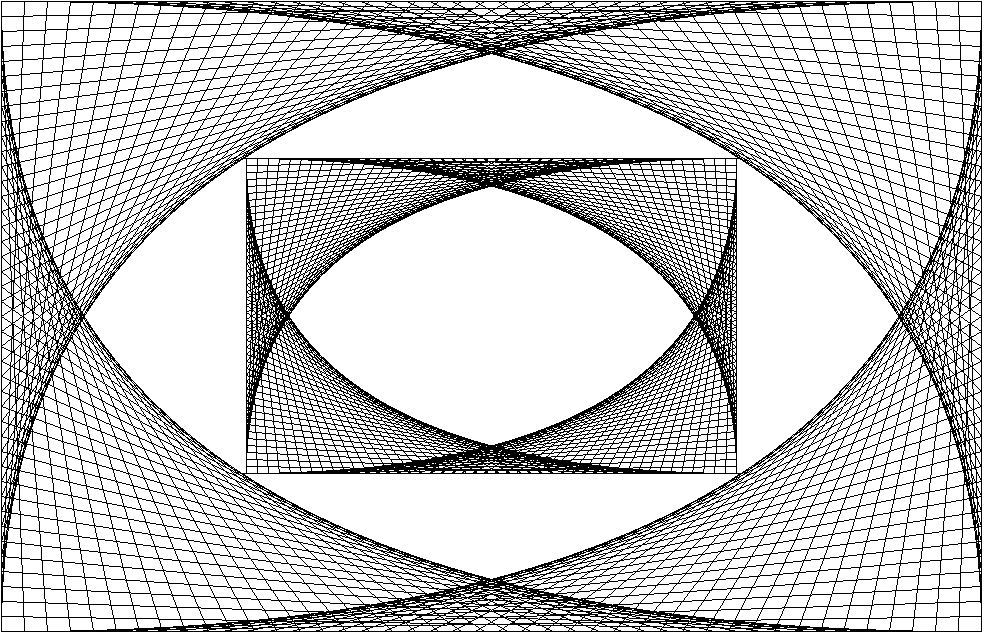

One of the AWT graphics labs we completed was the line art lab. For it, we wrote a program that used a loop to draw straight lines inside a rectangle, which together formed the picture shown above. The extra credit version of the lab required adding a smaller copy of the graphic that was tangent to the original drawing.
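The loop idea behind the line art lab can be sketched in a few lines of Java. The lab's exact pattern isn't described here, so this is only a minimal sketch under an assumption: line `i` runs from a point `i` steps down the left edge of the rectangle to a point `i` steps along the bottom edge, so the straight lines together trace a curve in one corner. The class and method names (`LineArt`, `lineEndpoints`, `draw`) are hypothetical; only `Graphics.drawRect` and `Graphics.drawLine` come from AWT.

```java
import java.awt.Color;
import java.awt.Graphics;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;

public class LineArt {
    // Hypothetical helper: returns {x1, y1, x2, y2} for each of the n + 1 lines.
    // Line i starts i steps down the left edge and ends i steps right along the
    // bottom edge, so consecutive lines lean a little more each time.
    public static List<int[]> lineEndpoints(int x, int y, int w, int h, int n) {
        List<int[]> lines = new ArrayList<>();
        for (int i = 0; i <= n; i++) {
            int x1 = x;
            int y1 = y + i * h / n;
            int x2 = x + i * w / n;
            int y2 = y + h;
            lines.add(new int[] {x1, y1, x2, y2});
        }
        return lines;
    }

    // Draws the bounding rectangle and then every line computed above.
    public static void draw(Graphics g, int x, int y, int w, int h, int n) {
        g.drawRect(x, y, w, h);
        for (int[] p : lineEndpoints(x, y, w, h, n)) {
            g.drawLine(p[0], p[1], p[2], p[3]);
        }
    }

    public static void main(String[] args) {
        // Render off-screen into an image so no window is needed.
        BufferedImage img = new BufferedImage(420, 420, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = img.createGraphics();
        g.setColor(Color.WHITE);
        g.fillRect(0, 0, 420, 420);
        g.setColor(Color.BLACK);
        draw(g, 10, 10, 400, 400, 20);
        g.dispose();
        System.out.println("Drew " + lineEndpoints(10, 10, 400, 400, 20).size() + " lines");
    }
}
```

Separating the endpoint math from the drawing keeps the loop easy to check; the same `draw` call works inside an applet's or panel's `paint(Graphics g)` method. A smaller tangent copy for the extra credit would be a second `draw` call with a different origin and size.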

In one of the ArrayList exercises, we had to model a game of Bulgarian Solitaire. The game begins with a triangular number of cards (for example, 45 = 1 + 2 + ... + 9), which are divided into a random number of piles, each containing a random number of cards. In every round, you take one card from each pile and form a new pile from those cards; any pile that becomes empty disappears. The game ends when the piles have sizes 1, 2, 3, 4, ... in some order. To write the program, we had to generate a random starting configuration and then play the game until it ended, printing the final result.
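A minimal sketch of the exercise in Java, assuming 45 cards (so the finished state is piles of 1 through 9) and assuming one simple way to split the deck randomly; the class and method names are hypothetical, not the assignment's required ones. It stores pile sizes in an `ArrayList<Integer>`, which is the point of the exercise.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

public class BulgarianSolitaire {
    // Splits totalCards into piles of random positive size that add up to totalCards.
    public static ArrayList<Integer> randomPiles(int totalCards, Random rng) {
        ArrayList<Integer> piles = new ArrayList<>();
        int remaining = totalCards;
        while (remaining > 0) {
            int size = 1 + rng.nextInt(remaining); // between 1 and remaining
            piles.add(size);
            remaining -= size;
        }
        return piles;
    }

    // One round: take a card from every pile, drop piles that become empty,
    // and add a new pile made of the cards that were taken.
    public static void playRound(ArrayList<Integer> piles) {
        int taken = piles.size();
        // Walk backwards so removing an element does not skip the next one.
        for (int i = piles.size() - 1; i >= 0; i--) {
            int size = piles.get(i) - 1;
            if (size == 0) {
                piles.remove(i); // int argument: removes by index, not by value
            } else {
                piles.set(i, size);
            }
        }
        piles.add(taken);
    }

    // Finished when the pile sizes are exactly 1, 2, ..., k in some order.
    public static boolean isFinished(List<Integer> piles, int k) {
        if (piles.size() != k) return false;
        List<Integer> sorted = new ArrayList<>(piles);
        Collections.sort(sorted);
        for (int i = 0; i < k; i++) {
            if (sorted.get(i) != i + 1) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        int k = 9;                      // assumption: 45 = 1 + 2 + ... + 9 cards
        int total = k * (k + 1) / 2;
        ArrayList<Integer> piles = randomPiles(total, new Random());
        System.out.println("Start: " + piles);
        int rounds = 0;
        while (!isFinished(piles, k)) {
            playRound(piles);
            rounds++;
        }
        System.out.println("Finished after " + rounds + " rounds: " + piles);
    }
}
```

The `while` loop relies on a known property of the game: when the number of cards is triangular, play always reaches the 1, 2, ..., k configuration, which is why the starting count matters. Note the `piles.remove(i)` call: on an `ArrayList<Integer>`, passing an `int` removes by position, a common source of bugs in this kind of exercise.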

In Apps for Good, I worked on a team with Alex Sun and Ishita Goluguri. Our group developed an app called Echolocate, which uses speech-to-text translation to display dialogue in a speech bubble above the speaker’s head. It is designed to assist people with hearing loss who may struggle to participate in group conversations.

Problem - While some deaf people learn to read lips, even the most accomplished lip readers understand only 25-30% of a conversation without context. In addition, only one million people in the USA use American Sign Language, so people with hearing loss often have no practical way to participate in a typical conversation, especially in a group setting.

Audience - Hearing-impaired or deaf people who may have trouble following conversations.

To solve this problem, we researched existing apps such as AVA and other speech-to-text software. Ultimately, we used the following APIs in our product: the Android Speech API, the Android CameraX API, and the Firebase ML Kit Face Detection API. Our group also developed our "Who's Talking" algorithm, which forms the basis of the part of our program that recognizes the speaker in a conversation. The algorithm works as follows: (1) every face within the camera frame is detected, (2) a box is drawn around each person’s face and around the person’s mouth, (3) the height of both boxes is measured, (4) the ratio of mouth height to face height is calculated, and (5) the person with the largest ratio is identified as the one talking.

Included below is the presentation file we used to present our project at the Apps for Good fair.
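The five steps of the "Who's Talking" algorithm can be sketched in plain Java. This is a hedged illustration, not the app's code: it assumes the face-detection step (steps 1 and 2) has already produced a face box and a mouth box for each person, and represents each person with a hypothetical `FaceBoxes` class holding just the two box heights. No ML Kit or Android calls are used here.

```java
import java.util.List;

public class WhosTalking {
    // Hypothetical stand-in for one detected person: the heights in pixels of
    // the box around the face and the box around the mouth (steps 1-3).
    public static final class FaceBoxes {
        public final String personId;
        public final int faceHeight;
        public final int mouthHeight;

        public FaceBoxes(String personId, int faceHeight, int mouthHeight) {
            this.personId = personId;
            this.faceHeight = faceHeight;
            this.mouthHeight = mouthHeight;
        }
    }

    // Step 4: mouth height divided by face height.
    static double mouthRatio(FaceBoxes f) {
        return (double) f.mouthHeight / f.faceHeight;
    }

    // Step 5: the person with the largest ratio is treated as the speaker.
    // Returns null if no usable faces were detected in the frame.
    public static FaceBoxes findSpeaker(List<FaceBoxes> faces) {
        FaceBoxes speaker = null;
        double best = -1.0;
        for (FaceBoxes f : faces) {
            if (f.faceHeight <= 0) continue; // skip degenerate boxes
            double ratio = mouthRatio(f);
            if (ratio > best) {
                best = ratio;
                speaker = f;
            }
        }
        return speaker;
    }

    public static void main(String[] args) {
        // Made-up box heights for three people in one camera frame.
        List<FaceBoxes> frame = List.of(
                new FaceBoxes("A", 200, 30),
                new FaceBoxes("B", 120, 36),
                new FaceBoxes("C", 150, 20));
        FaceBoxes speaker = findSpeaker(frame);
        System.out.println("Speaker: " + (speaker == null ? "none" : speaker.personId));
    }
}
```

Comparing a ratio instead of the raw mouth height is presumably what keeps the method fair across distances: a person sitting close to the camera has a larger face and mouth box, but an open mouth still takes up a larger share of their own face. In the sample frame, B's ratio (36/120 = 0.30) beats A's (0.15) even though A's face box is larger.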