STEM

Impact of Retracted Journal Articles: Online COVID-19 Misinformation

Overview

In STEM (Science, Technology, Engineering, Mathematics), the main focus of the class up to February Fair is on developing the skills necessary to complete a scientific, engineering, or mathematical project. Our time spent brainstorming over the summer, researching our topic thoroughly in scientific journals, and designing and completing experimentation culminates at February Fair, where we present our research to six judges who evaluate our projects. This page is an overview of my project, Impact of Retracted Journal Articles: Online COVID-19 Misinformation.

Abstract

A desire to publish COVID-19 research quickly has resulted in less due diligence in the scientific process, allowing research that does not meet scientific standards to slip through and subsequently contribute to online misinformation about COVID-19. When such articles are identified, they are usually issued a notice of retraction; however, it is unclear whether this is effective in changing public perceptions online. It is hypothesized that if the overall sentiment towards retracted scientific journal articles (RSJAs) relating to COVID-19 on Facebook does not change post-retraction, then RSJAs will continue to contribute to misinformation surrounding COVID-19 even after retraction. To test this, the website Retraction Watch was used to obtain a list of RSJAs relating to COVID-19, and each article in the list was screened for eligibility. After screening, the context and date of each Facebook mention of each RSJA were obtained through Altmetric. Using Sentistrength, the sentiment of each context was quantified on a scale from positive to negative, with contexts separated into those prior to retraction and those post-retraction. Out of a total of 225 mentions in Facebook posts, 166 were prior to retraction and 59 were post-retraction. The average sentiment of posts after retraction changed little compared to the average sentiment prior to retraction. The relatively low number of post-retraction mentions, together with the lack of change in sentiment, indicates that faulty science in the form of RSJAs does contribute to misinformation online, meaning better retraction processes are warranted.

Phrase 1

How are retracted scientific journal articles relating to COVID-19 influencing discourse about COVID-19 on Facebook?

Phrase 2

If the overall sentiment towards retracted scientific journal articles (RSJAs) relating to COVID-19 on Facebook does not change post-retraction, then RSJAs will continue to contribute to misinformation surrounding COVID-19 even after retraction.

Background Infographic

Background

The Information Age has given misinformation and correct information alike the ability to spread through the public quickly. In fact, misinformation spreads faster than correct information on Twitter (Wang et al., 2019). As such, misinformation on social media is an area of increasing interest, given the growing prevalence of social media in modern society (Ospina, 2019). One possible source of misinformation on social media is retracted scientific journal articles (RSJAs). Because the general public trusts the institution of science, RSJAs can spread misinformation by leading people to believe that they have the correct facts when they do not. RSJAs also degrade the trust that the general public has in the institution of science. The rate of retraction for articles relating to COVID-19 is much higher than the general rate of retraction in the body of science (Yeo-Teh & Tang, 2020). In fact, the website retractionwatch.com has dedicated a section to listing COVID-19-related retractions. However, the degree to which these RSJAs actually contribute to misinformation online has not yet been quantified. Doing so could provide evidence for how serious a problem this is in terms of influencing the general public. One way to quantify the influence of RSJAs is to compare the number and sentiment of mentions on social media for a defined list of RSJAs.

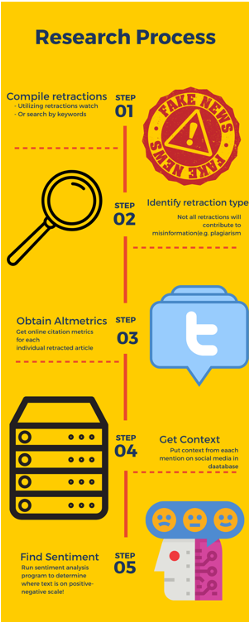

Procedure Infographic

Procedure

Obtaining dataset

Similar studies analyzing the sentiment towards scientific journal articles generally performed keyword searches on the more prevalent databases, such as Pubmed. This method has also been adapted to generate lists of RSJAs, as most reputable databases note when an article has been retracted. However, while researching, the website Retraction Watch was identified as a useful resource for creating the set of articles for this study. Other previous studies of retractions in science combined keyword searches with searches of Retraction Watch’s own database and were able to create a more complete set of retracted articles relating to a field; however, Retraction Watch had dedicated a page to retractions relating to COVID-19, which contained a more comprehensive list than those available for other topics. Preliminary searches through databases such as Pubmed confirmed that the list on Retraction Watch covered RSJAs relating to COVID-19 well. Ultimately, the set of articles listed as of January 23rd was used as the final dataset of RSJAs relating to COVID-19. Dates of publication and retraction were also collected from Retraction Watch.

Cleaning Dataset

Not every RSJA in the list was necessarily contributing to misinformation, so those unrelated to misinformation had to be removed. Eligibility was determined from the stated reason for retraction. RSJAs were included if and only if they were retracted due to flawed methodology, faulty data, or misleading conclusions. RSJAs for which no reason for retraction was stated were excluded.
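The screening rule above can be sketched in Python. This is only an illustration of the inclusion criteria, not the procedure actually used, since screening in this project was done by reading each entry. The `Article` record, the reason labels, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the three eligible reasons named in the text.
ELIGIBLE_REASONS = {"flawed methodology", "faulty data", "misleading conclusions"}


@dataclass
class Article:
    title: str
    reason: Optional[str]  # None when no reason for retraction was stated


def is_eligible(article: Article) -> bool:
    """Keep an article only if its stated reason is one of the eligible ones."""
    if article.reason is None:
        return False  # no stated reason: excluded
    return article.reason.strip().lower() in ELIGIBLE_REASONS


def screen(articles):
    """Return the articles that pass the reason-for-retraction screen."""
    return [a for a in articles if is_eligible(a)]


# Made-up placeholder records, for illustration only (not the study's data).
sample = [
    Article("Placeholder A", "Faulty data"),
    Article("Placeholder B", None),
    Article("Placeholder C", "duplicate publication"),
]
kept = screen(sample)
print([a.title for a in kept])
```

With these placeholder records, only the first article is kept: the second has no stated reason and the third was retracted for a reason outside the three eligible ones.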

Obtaining Context

For each RSJA remaining after screening by reason for retraction, Altmetric was used to obtain the context of its mentions on Facebook. This was done by manually visiting each article's Altmetric page and collating the dates and text of the mentions in a spreadsheet. The mentions were then partitioned based on whether they were made prior to or post-retraction.
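The partitioning step can be sketched as follows. In this project the split was done in a spreadsheet, so the function name and the sample mentions here are hypothetical. The sketch also assumes that a mention made on the retraction date itself counts as post-retraction, which the procedure above does not specify.

```python
from datetime import date


def partition_mentions(mentions, retraction_date):
    """Split (mention_date, text) pairs into pre- and post-retraction lists.

    Assumption: a mention dated exactly on the retraction date is treated
    as post-retraction.
    """
    before, after = [], []
    for mention_date, text in mentions:
        if mention_date < retraction_date:
            before.append(text)
        else:
            after.append(text)
    return before, after


# Made-up placeholder mentions, for illustration only (not the study's data).
mentions = [
    (date(2020, 5, 1), "placeholder post one"),
    (date(2020, 6, 10), "placeholder post two"),
    (date(2020, 6, 4), "placeholder post three"),
]
before, after = partition_mentions(mentions, retraction_date=date(2020, 6, 4))
print(len(before), "pre-retraction;", len(after), "post-retraction")
```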

Sentiment Analysis

The program Sentistrength was downloaded from its website. The partitioned mentions were transferred from a Google Sheets file into a .txt file so that they could be parsed by Sentistrength. Data cleaning had to be performed once again: this time, all excess spaces, tabs, and paragraph breaks were removed from each mention using Excel functions. The default settings were used for the Sentistrength sentiment analysis.
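The whitespace cleaning described above was done with Excel functions. An equivalent step could be sketched in Python as shown below, assuming the goal is a plain-text file with one mention per line. The function names and the output file name are hypothetical.

```python
import re
from pathlib import Path


def normalize_whitespace(text: str) -> str:
    """Collapse runs of spaces, tabs, and line breaks into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


def write_mentions(mentions, path):
    """Write cleaned mentions to a text file, one mention per line."""
    lines = [normalize_whitespace(m) for m in mentions]
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return lines


# A made-up mention containing a tab and a paragraph break.
print(normalize_whitespace("  A\tflawed \n\n study  "))  # A flawed study
```

Removing internal line breaks matters if each line of the file is treated as a separate text, because a multi-paragraph post would otherwise be scored as several separate mentions.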

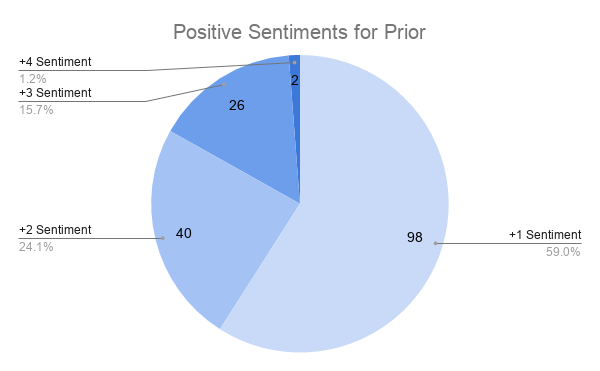

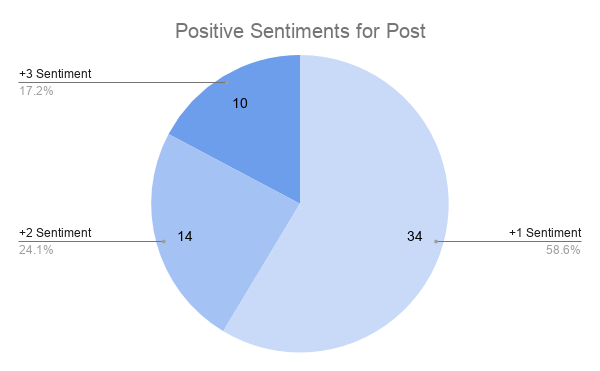

Figure 1

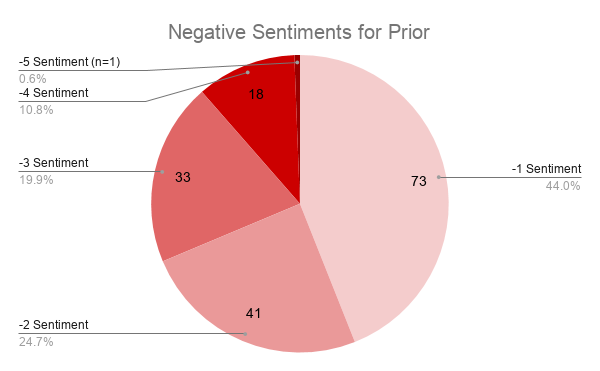

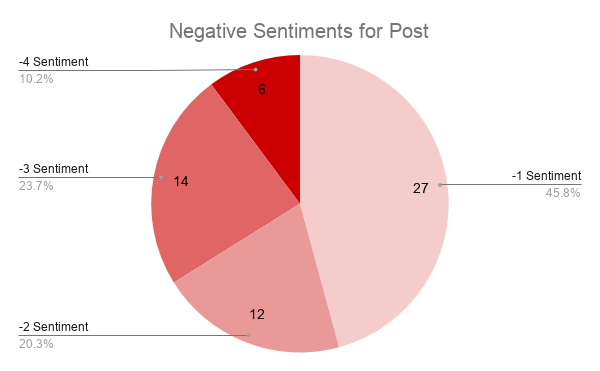

Figure 2

Figure 3

Figure 4

Analysis

Overall, the results indicate that RSJAs are negatively impacting the discussion of COVID-19 on Facebook. Far fewer mentions were made post-retraction than prior to retraction, which indicates that people are likely more aware of an article's existence before it is retracted and thus take its information to be true, but are less likely to notice when the article is retracted and are therefore unable to act accordingly. In addition, the overall sentiment changes negligibly between the pre-retraction and post-retraction periods. The sentiments trend towards neutral, which is in line with other research on the sentiment of tweets referencing scientific publications (Friedrich et al., 2015).
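To make the comparison concrete, the short sketch below computes the share of mentions in each period from the counts reported in the abstract (166 and 59 out of 225). It also shows one possible way to average sentiment scores. The averaging helper is an assumption rather than the method used here: it supposes each text receives a positive score from 1 to 5 and a negative score from -1 to -5, and the example scores are placeholders, not the study's results.

```python
from statistics import mean

# Mention counts reported in the abstract.
PRE_MENTIONS = 166
POST_MENTIONS = 59
TOTAL = PRE_MENTIONS + POST_MENTIONS  # 225

pre_share = PRE_MENTIONS / TOTAL
post_share = POST_MENTIONS / TOTAL
print(f"pre-retraction share:  {pre_share:.1%}")   # 73.8%
print(f"post-retraction share: {post_share:.1%}")  # 26.2%


def mean_net_sentiment(scores):
    """Average of (positive + negative) over (pos, neg) score pairs.

    Assumption: pos is in 1..5 and neg is in -5..-1, so the net value
    ranges from -4 to 4 and 0 means neutral.
    """
    return mean(pos + neg for pos, neg in scores)


# Placeholder score pairs, NOT the study's data.
example_pre = [(1, -1), (2, -1), (1, -2)]
example_post = [(1, -1), (1, -1), (2, -2)]
print(mean_net_sentiment(example_pre), mean_net_sentiment(example_post))
```

Roughly three out of every four mentions came before the retraction, which is the basis for the claim that retraction notices reach far fewer readers than the original articles.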

The mean time to retraction was 27.5 days for the articles used after screening, whereas the typical time to retraction in the overall body of science is roughly 23.82 months (Steen et al., 2013). This can mean one of two things: the increased interest in COVID-19 publications has resulted in a higher degree of scrutiny, so that subpar research is identified and removed quickly; or COVID-19 publications have not been in circulation long enough for all of the subpar research to be identified, and thus much more COVID-19-related research may yet be retracted.
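Because the two figures are in different units, a quick conversion helps show the size of the gap. The sketch below assumes an average month length of 365.25 / 12 days. That conversion factor is an assumption made here for illustration and does not come from the cited study.

```python
# Average month length in days (an assumption used only for this conversion).
DAYS_PER_MONTH = 365.25 / 12

covid_mean_days = 27.5        # mean time to retraction in the screened set
general_mean_months = 23.82   # figure cited from Steen et al. (2013)

general_mean_days = general_mean_months * DAYS_PER_MONTH
ratio = general_mean_days / covid_mean_days

print(f"general time to retraction: about {general_mean_days:.0f} days")  # about 725 days
print(f"ratio to COVID-19 set:      about {ratio:.0f}x")                  # about 26x
```

Under that assumption, the screened COVID-19 articles were retracted roughly 26 times faster than the general figure.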

Discussion

Ideally, analysis would have been done on tweets rather than Facebook posts. Sentistrength is optimized for, and more accurate on, shorter texts, which suits Twitter given its character limit. Facebook posts, however, are not held to such a short limit, and the dataset contained lengthy posts that may make the results less accurate, given that Sentistrength is not as well equipped to deal with them. This does not change the fact that many of the sentiments towards the research were neutral both prior to and post-retraction: longer texts are more likely to contain an emotional word and thus to receive a stronger sentiment score from Sentistrength, so text length would be expected to push scores away from neutral rather than towards it. Also, some of the articles deemed eligible after screening had no Facebook mentions at all (n = 2), and some had only one (n = 2), which is not ideal for comparing sentiments prior to and post-retraction.

Future work will ideally perform more automated forms of analysis on a larger corpus of tweets. Additionally, because of the small sample size, it was not possible to identify all of the emotional words that have a large bearing on the sentiment determined by Sentistrength but are not necessarily negative in this context (e.g., "disease"). Had analysis been done on Twitter, such words could have been identified and removed from the emotional word lookup of the Sentistrength program.

In all, this study is a step towards evaluating the sentiment towards retractions on social media. Journals may have to do more to indicate that an article has been retracted, as it seems the current procedure is unable to change the overall sentiment online.

References

Friedrich, N., Bowman, T. D., Stock, W. G., & Haustein, S. (2015, July 7). Adapting sentiment analysis for tweets linking to scientific papers. arXiv.org. https://arxiv.org/abs/1507.01967

Ospina, E.O. (2019). The rise of social media. Our World in Data. Retrieved November 15, 2020, from https://ourworldindata.org/rise-of-social-media

Steen, R. G., Casadevall, A., & Fang, F. C. (2013, July 8). Why Has the Number of Scientific Retractions Increased? PLOS ONE. https://journals.plos.org/plosone/article?id=10.1371%2Fjournal.pone.0068397.

Wang, Y., McKee, M., Torbica, A., & Stuckler, D. (2019). Systematic Literature Review on the Spread of Health-related Misinformation on Social Media. Social Science & Medicine, 240, 112552. https://doi.org/10.1016/j.socscimed.2019.112552

Yeo-Teh, N. S., & Tang, B. L. (2020). An alarming retraction rate for scientific publications on coronavirus disease 2019 (COVID-19). Accountability in Research, 1-7. https://doi.org/10.1080/08989621.2020.1782203