About

4th-year Ph.D. Student

Department of Electrical & Computer Engineering

Worcester Polytechnic Institute, MA, USA

Welcome to my website! I am pursuing my Ph.D. under the supervision of Dr. Bashima Islam in the Department of Electrical and Computer Engineering at WPI. My research spans multimodal large language models (LLMs) and AI for health. I develop multimodal LLMs with a special emphasis on sensor integration, and I build deep learning models for digital health solutions.

My research focuses on two directions. First, I develop deep learning models that study behavioral development in infants using speech, audio, video, motion sensor, and ECG data. Second, I create digital health solutions for young adults using wearable IMU and audio data. I investigate how multimodal models can perform critical tasks by integrating diverse data sources such as audio, video, and sensor inputs. In particular, I emphasize efficient modality switching for egocentric perception and for infant-centric environment analysis. The goal is to advance the ability of AI systems to understand and interact with dynamic, real-world scenarios.

My research has been published in top venues such as EMNLP, IMWUT, INTERSPEECH, IEEE BSN, and EWSN.

Prior to joining WPI, I completed my Bachelor's degree in Electrical and Electronic Engineering at Bangladesh University of Engineering and Technology (BUET).

Please take the time to explore my website to learn more about me, my research, and my professional experience. You may be a fellow engineer, a researcher, or a potential collaborator, or you may simply want to talk with me about something. In any case, please feel free to contact me via email.

News

RAVEN got accepted at EMNLP Main Conference, 2025.

LLaSA got accepted at UbiComp, 2025.

LOCUS got accepted at EWSN, 2025.

Mindfulness Meditation and Respiration got accepted at IMWUT, 2025.

Our paper QUADS got accepted at INTERSPEECH, 2025.

Received Master of Science in Electrical and Computer Engineering from WPI.

Passed Ph.D. diagnostic exam.

InfantMotion2Vec got accepted at IEEE BSN, 2024.

Our paper got accepted at IEEE CHASE, 2024.

Started working as a Graduate Teaching Assistant at BASH Lab, ECE, WPI.

Started working as a Graduate Research Assistant at Dept. of ECE, WPI.

I will be starting my Ph.D. at BASH Lab, ECE, WPI.

Education

Thesis: Surface EMG-Based Hand Gesture Recognition Using Discrete Wavelet Transformation

Publications

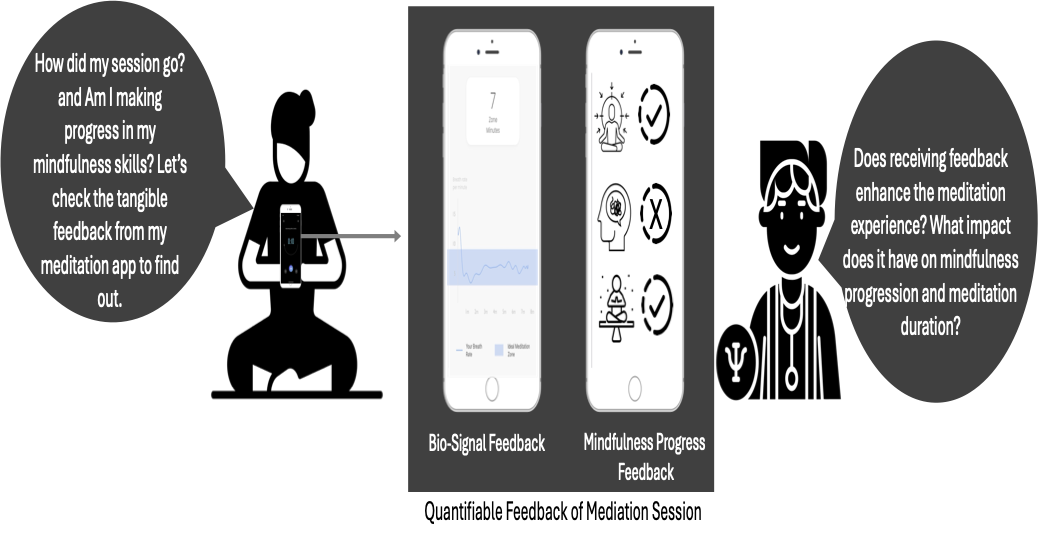

Mindfulness Meditation and Respiration: Accelerometer-based Respiration Rate and Mindfulness Progress Estimation to Enhance App Engagement and Mindfulness Skills

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT)

Mindfulness training is widely recognized for its benefits in reducing depression, anxiety, and loneliness. With the rise of smartphone-based mindfulness apps, digital meditation has become more accessible, but sustaining long-term user engagement remains a challenge. This paper explores whether respiration biosignal feedback and mindfulness skill estimation enhance system usability and skill development. We develop a respiration tracking algorithm based on the smartphone’s accelerometer, eliminating the need for additional wearables. Unlike existing methods, our approach accurately captures slow breathing patterns typical of mindfulness meditation. Additionally, we introduce the first quantitative framework to estimate mindfulness skills (concentration, sensory clarity, and equanimity) based on accelerometer-derived respiration data. We develop and test our algorithms on 261 mindfulness sessions in both controlled and real-world settings. A user study compares an experimental group receiving biosignal feedback with a control group using a standard app, and it shows that respiration feedback enhances system usability. Our respiration tracking model achieves a mean absolute error (MAE) of 1.6 breaths per minute, closely aligning with ground truth data. Our mindfulness skill estimation attains F1 scores of 80–84% in tracking skill progression. By integrating respiration tracking and mindfulness estimation into a commercial app, we demonstrate the potential of smartphone sensors to enhance digital mindfulness training.
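The abstract does not describe how respiration is extracted from the accelerometer. As a rough illustration only, the sketch below implements one common baseline, which is not the paper's method. It treats a single accelerometer axis as a breathing trace and removes its mean (the gravity offset). It then smooths the trace with a roughly 1 s moving average and converts the spacing between upward zero crossings into breaths per minute. The function names, the choice of axis, and the smoothing window are all assumptions.

```python
import math
from typing import List, Sequence


def moving_average(x: Sequence[float], window: int) -> List[float]:
    """Centered moving average; the window shrinks near the edges."""
    half = window // 2
    out = []
    for i in range(len(x)):
        lo = max(0, i - half)
        hi = min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def breaths_per_minute(accel: Sequence[float], fs: float, smooth_s: float = 1.0) -> float:
    """Hypothetical baseline: breathing rate from one accelerometer axis.

    accel: samples from a single axis (assumed to move with the chest/abdomen).
    fs: sampling rate in Hz.
    """
    mean = sum(accel) / len(accel)
    centered = [a - mean for a in accel]  # removes the constant gravity offset
    smoothed = moving_average(centered, max(1, int(smooth_s * fs)))
    # Indices where the smoothed trace crosses zero going upward (one per breath).
    ups = [i for i in range(1, len(smoothed)) if smoothed[i - 1] < 0 <= smoothed[i]]
    if len(ups) < 2:
        return 0.0  # not enough full breaths to estimate a rate
    span_s = (ups[-1] - ups[0]) / fs
    return (len(ups) - 1) * 60.0 / span_s


if __name__ == "__main__":
    fs = 10.0
    t = [i / fs for i in range(600)]  # 60 s of data
    # Synthetic slow breathing at 0.1 Hz (6 breaths/min) plus gravity and a small 2 Hz jitter.
    signal = [9.81 + 0.02 * math.sin(2 * math.pi * 0.1 * ti + 0.3)
              + 0.001 * math.sin(2 * math.pi * 2.0 * ti) for ti in t]
    print(round(breaths_per_minute(signal, fs), 1))
```

The rate is measured across whole breath cycles (first to last upward crossing) rather than by counting crossings in a fixed window. This matters for slow meditation breathing, where a one-minute window may contain only a few cycles.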

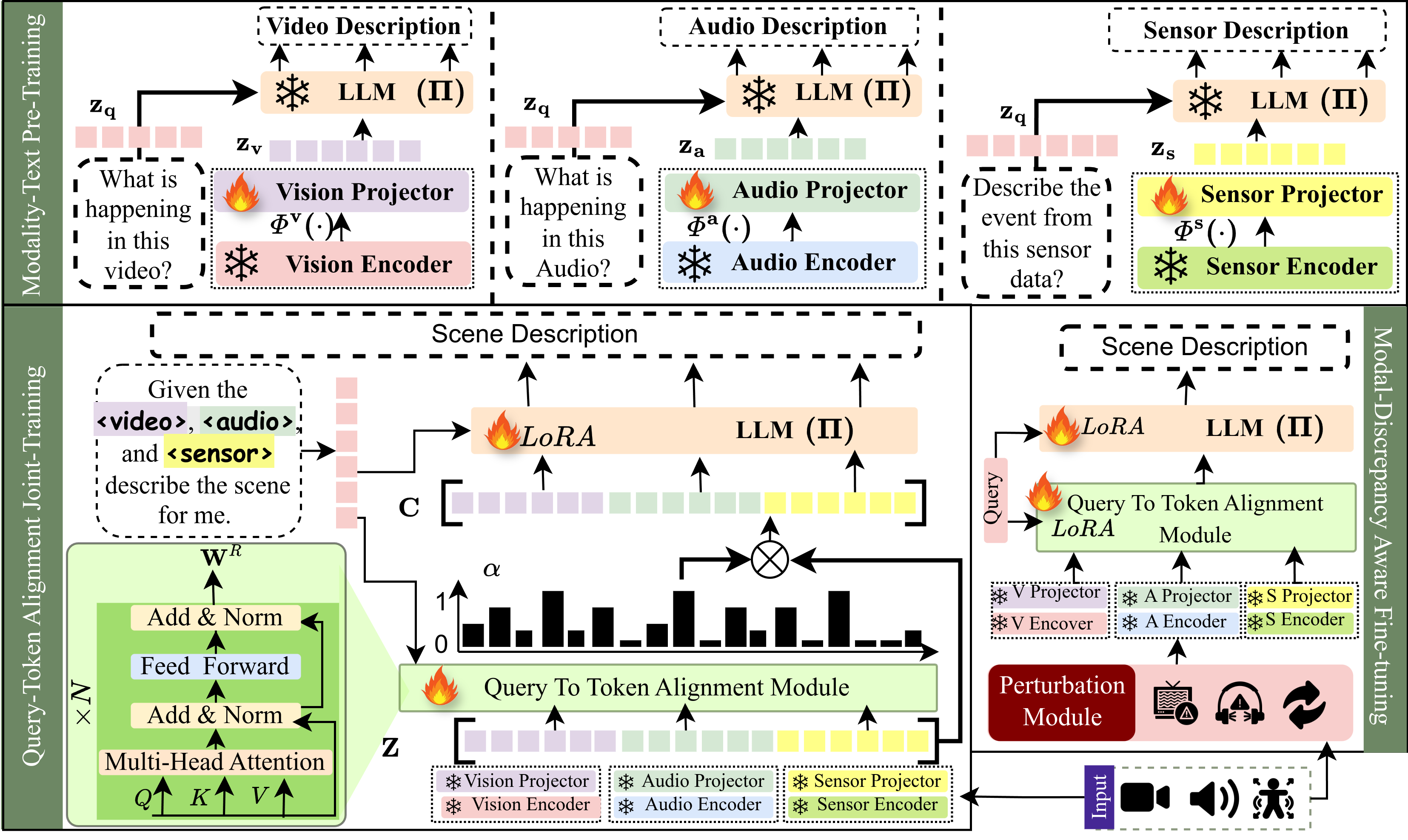

RAVEN: Query-Guided Representation Alignment for Question Answering over Audio, Video, Embedded Sensors, and Natural Language

Conference on Empirical Methods in Natural Language Processing (EMNLP'25), Main Conference

Multimodal question answering (QA) often requires identifying which video, audio, or sensor tokens are relevant to the question. Yet modality disagreements are common: off-camera speech, background noise, or motion outside the field of view often mislead fusion models that weight all streams equally. We present RAVEN, a unified QA architecture whose core is QuART, a query-conditioned cross-modal gating module. QuART assigns scalar relevance scores to each token across modalities, enabling the model to amplify informative signals and suppress distractors before fusion. RAVEN is trained through a three-stage pipeline comprising unimodal pretraining, query-aligned fusion, and disagreement-oriented fine-tuning. Each stage targets a distinct challenge in multimodal reasoning: representation quality, cross-modal relevance, and robustness to modality mismatch. To support training and evaluation, we release AVS-QA, a dataset of 300K synchronized Audio-Video-Sensor streams paired with automatically generated question-answer pairs. We evaluate on seven multimodal QA benchmarks, including egocentric and exocentric tasks. RAVEN achieves accuracy gains of up to 14.5% on egocentric tasks and 8.0% on exocentric tasks over state-of-the-art multimodal large language models. Incorporating sensor data provides an additional 16.4% boost, and the model remains robust under modality corruption, outperforming SOTA baselines by 50.23%.
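The abstract describes QuART as assigning a scalar relevance score to every token, conditioned on the query, before fusion. The toy sketch below illustrates that idea and is not QuART's actual architecture, which uses learned components described in the paper. Each token is scored by a scaled dot product with a query embedding, the score is squashed through a sigmoid, and the token is rescaled by that score. The raw dot product, the sigmoid, the absence of learned projections, and the function names are all assumptions.

```python
import math
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def relevance_scores(query: Vector, tokens: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Hypothetical gate: one score in (0, 1) per token, per modality, = sigmoid(q . t / sqrt(d))."""
    scale = math.sqrt(len(query))
    return {
        modality: [1.0 / (1.0 + math.exp(-dot(query, tok) / scale)) for tok in toks]
        for modality, toks in tokens.items()
    }


def gate_tokens(query: Vector, tokens: Dict[str, List[Vector]]) -> Dict[str, List[Vector]]:
    """Scale every token by its relevance score, before any cross-modal fusion happens."""
    scores = relevance_scores(query, tokens)
    return {
        modality: [[s * x for x in tok] for s, tok in zip(scores[modality], toks)]
        for modality, toks in tokens.items()
    }


if __name__ == "__main__":
    query = [1.0, 0.0]
    tokens = {"audio": [[2.0, 0.0]], "video": [[0.0, 2.0]], "sensor": [[-2.0, 0.0]]}
    print(relevance_scores(query, tokens))
```

In this toy example, the audio token aligned with the query is amplified relative to the others, and the sensor token pointing away from it is suppressed. The gate is computed per token rather than per modality, so a single stream can keep some tokens and damp others.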

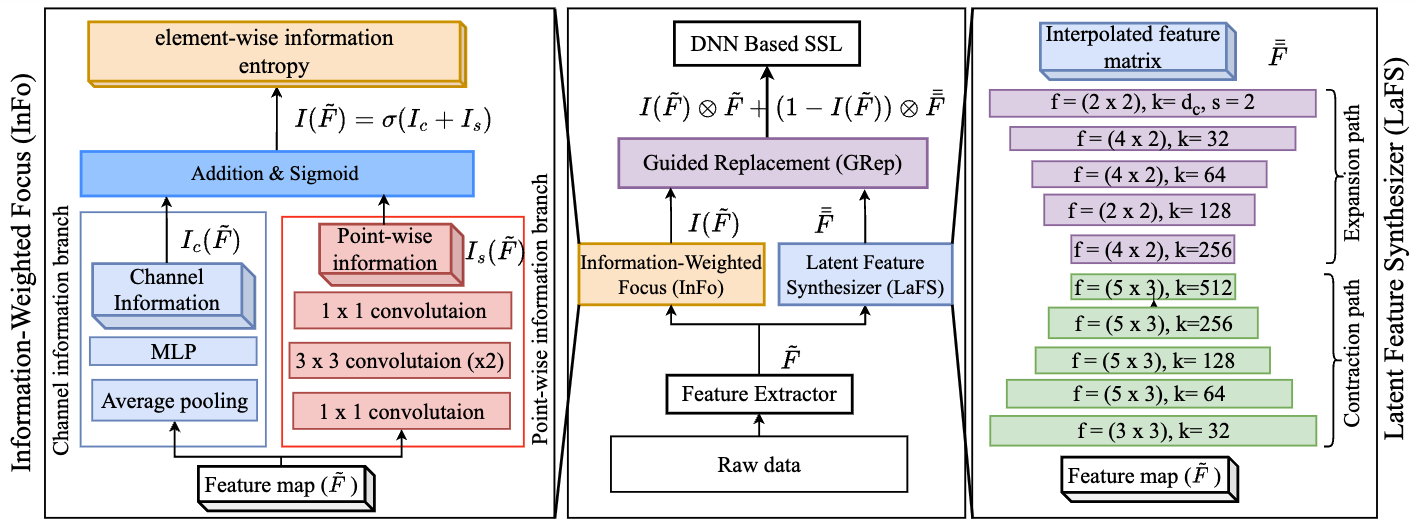

LOCUS – LOcalization with Channel Uncertainty and Sporadic Energy

International Conference on Embedded Wireless Systems and Networks (EWSN'25)

Accurate sound source localization (SSL), such as direction-of-arrival (DoA) estimation, relies on consistent multichannel data. However, batteryless systems often suffer from missing data due to the stochastic nature of energy harvesting, which degrades localization performance. We propose LOCUS, a deep learning framework that recovers corrupted features in such settings. LOCUS integrates three modules: (1) Information-Weighted Focus (InFo) to identify corrupted regions, (2) Latent Feature Synthesizer (LaFS) to reconstruct missing features, and (3) Guided Replacement (GRep) to restore data without altering valid inputs. LOCUS significantly improves DoA accuracy under missing-channel conditions, achieving up to 36.91% error reduction on DCASE and LargeSet, and 25.87–59.46% gains in real-world deployments. We release a 50-hour multichannel dataset to support future research on localization under energy constraints.
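A key property of Guided Replacement is that valid inputs pass through unchanged, and only corrupted regions are overwritten. The minimal sketch below illustrates only that masking step. It flags lost frames by near-zero energy, a hypothetical stand-in for InFo, whose actual mechanism is described in the paper. It also assumes a reconstruction, which LaFS would produce in LOCUS, is already available. All names are illustrative.

```python
from typing import List, Sequence

Frame = List[float]


def missing_mask(frames: Sequence[Frame], eps: float = 1e-12) -> List[bool]:
    """Hypothetical heuristic: flag frames whose energy is ~0 (e.g. lost while unpowered)."""
    return [sum(x * x for x in f) <= eps for f in frames]


def guided_replace(observed: Sequence[Frame], reconstructed: Sequence[Frame],
                   missing: Sequence[bool]) -> List[Frame]:
    """Use reconstructed frames only where data is missing; keep valid frames untouched."""
    return [list(rec) if miss else list(obs)
            for obs, rec, miss in zip(observed, reconstructed, missing)]


if __name__ == "__main__":
    observed = [[0.3, -0.1], [0.0, 0.0], [0.2, 0.4]]       # middle frame was lost
    reconstructed = [[9.0, 9.0], [0.1, 0.2], [9.0, 9.0]]   # model output for every frame
    mask = missing_mask(observed)
    print(mask, guided_replace(observed, reconstructed, mask))
```

Because the replacement is a hard selection rather than a blend, reconstruction errors cannot leak into frames that were captured correctly.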

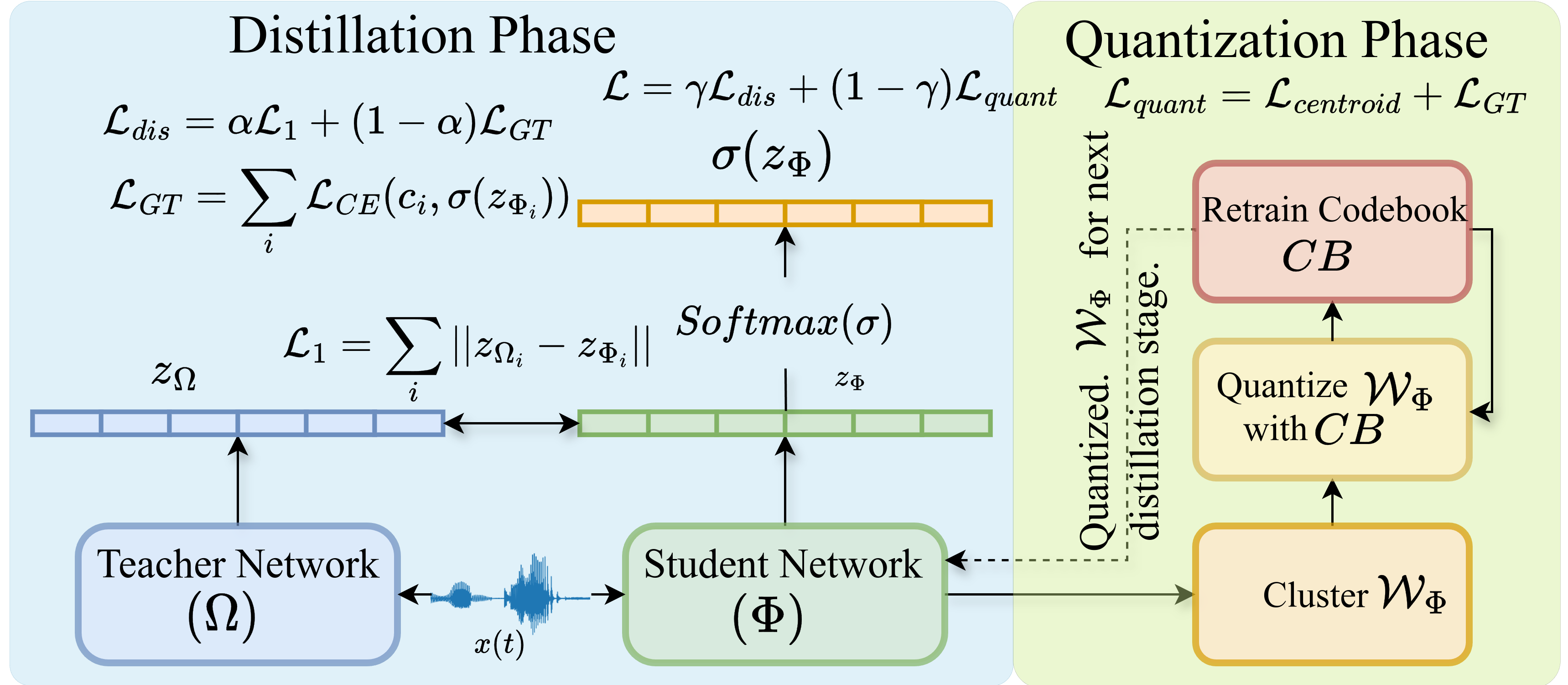

QUADS: QUAntized Distillation Framework for Efficient Speech Language Understanding

INTERSPEECH'25

Spoken Language Understanding (SLU) systems must balance performance and efficiency, particularly in resource-constrained environments. Existing methods apply distillation and quantization separately, leading to suboptimal compression as distillation ignores quantization constraints. We propose QUADS, a unified framework that optimizes both through multi-stage training with a pre-tuned model, enhancing adaptability to low-bit regimes while maintaining accuracy. QUADS achieves 71.13% accuracy on SLURP and 99.20% on FSC, with only minor degradations of up to 5.56% compared to state-of-the-art models. Additionally, it reduces computational complexity by 60–73× (GMACs) and model size by 83–700×, demonstrating strong robustness under extreme quantization. These results establish QUADS as a highly efficient solution for real-world, resource-constrained SLU applications.
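The abstract does not give QUADS's exact training objective. The general idea of making distillation aware of quantization can be illustrated with a standard sketch, which is not the paper's design. The student's outputs are computed with fake-quantized (quantize-then-dequantize) weights and compared with the teacher's temperature-softened outputs using a Hinton-style KD loss. The symmetric per-row quantizer, the temperature, and all function names are assumptions. This plain-Python version only computes the forward loss. Actual training would need a framework and a straight-through estimator to backpropagate through the rounding.

```python
import math
from typing import List, Sequence


def fake_quantize(weights: Sequence[float], bits: int) -> List[float]:
    """Symmetric uniform quantization to `bits` bits, then dequantization back to floats."""
    if bits < 2:
        raise ValueError("need at least 2 bits for a symmetric quantizer")
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)
    scale = max_abs / qmax
    # round() rounds halves to even; the clamp keeps integer codes within [-qmax, qmax].
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def softmax(logits: Sequence[float], temperature: float) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
            temperature: float = 2.0) -> float:
    """T^2-scaled KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return temperature ** 2 * sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def quantized_student_logits(weight_rows: Sequence[Sequence[float]],
                             x: Sequence[float], bits: int) -> List[float]:
    """Linear layer run with fake-quantized weights, so the KD loss sees quantization error."""
    return [sum(w * xi for w, xi in zip(fake_quantize(row, bits), x)) for row in weight_rows]


if __name__ == "__main__":
    weights = [[0.5, -1.0, 0.25], [0.9, 0.1, -0.3], [-0.2, 0.7, 0.6]]
    x = [1.0, 0.5, -1.0]
    teacher = [1.2, 0.4, -0.3]
    for bits in (8, 4, 2):
        print(bits, kd_loss(quantized_student_logits(weights, x, bits), teacher))
```

Evaluating the student at the target bit width inside the loss is what lets distillation account for quantization constraints, instead of compressing the model only after distillation has finished.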

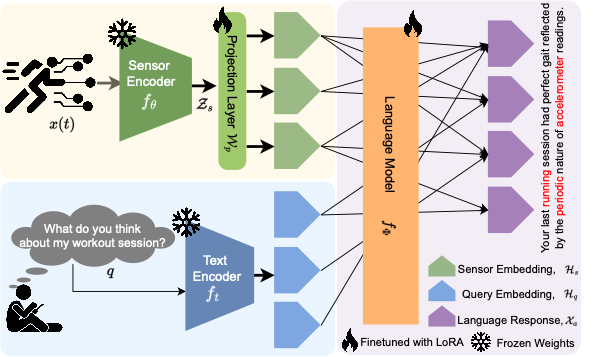

LLaSA: A Sensor-Aware LLM for Natural Language Reasoning of Human Activity from IMU Data

UbiComp'25

Wearable systems can recognize activities from IMU data but often fail to explain their underlying causes or contextual significance. To address this limitation, we introduce two large-scale resources: SensorCap, comprising 35,960 IMU–caption pairs, and OpenSQA, with 199,701 question–answer pairs designed for causal and explanatory reasoning. OpenSQA includes a curated tuning split (Tune-OpenSQA) optimized for scientific accuracy, narrative clarity, and diagnostic insight. Leveraging these datasets, we develop LLaSA (Large Language and Sensor Assistant), a family of compact sensor-aware language models (7B and 13B) that generate interpretable, context-rich responses to open-ended questions grounded in raw IMU data. LLaSA outperforms commercial LLMs, including GPT-3.5 and GPT-4o-mini, on benchmark and real-world tasks, demonstrating the effectiveness of domain supervision and model alignment for sensor reasoning.

Work Experience

Worcester, MA, USA

Worcester, MA, USA

Dhaka, Bangladesh

Contact

Email:

mkhan@wpi.edu, nurhossain2301@gmail.com

Call:

+1 774 519 0452